Welcome to the OpenFunction documentation site!


v1.2 (latest)

- 1: Introduction

- 2: Getting Started

- 2.1: Installation

- 2.2: Quickstarts

- 2.2.1: Prerequisites

- 2.2.2: Create Sync Functions

- 2.2.3: Create Async Functions

- 2.2.4: Create Serverless Applications

- 2.2.5: Create Wasm Functions

- 3: Concepts

- 3.1: Function Definition

- 3.2: Function Build

- 3.3: Build Strategy

- 3.4: Function Trigger

- 3.5: Function Outputs

- 3.6: Function Scaling

- 3.7: Function Signatures

- 3.8: Wasm Functions

- 3.9: Serverless Applications

- 3.10: BaaS Integration

- 3.11: Networking

- 3.11.1: Introduction

- 3.11.2: OpenFunction Gateway

- 3.11.3: Route

- 3.11.4: Function Entrypoints

- 3.12: CI/CD

- 3.13: OpenFunction Events

- 3.13.1: Introduction

- 3.13.2: Use EventSource

- 3.13.3: Use EventBus and Trigger

- 3.13.4: Use Multiple Sources in One EventSource

- 3.13.5: Use ClusterEventBus

- 3.13.6: Use Trigger Conditions

- 4: Operations

- 4.1: Networking

- 5: Best Practices

- 5.1: Create a Knative-based Function to Interact with Middleware

- 5.2: Use SkyWalking for OpenFunction as an Observability Solution

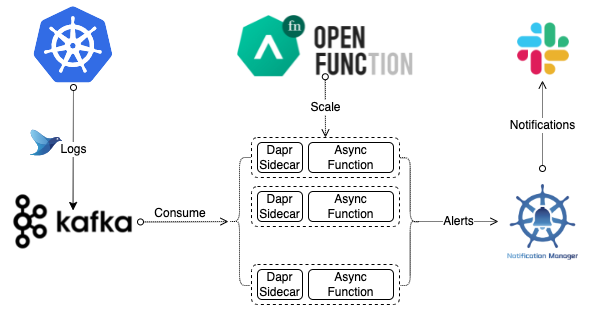

- 5.3: Elastic Log Alerting

- 6: Reference

- 6.1: Component Specifications

- 6.1.1: Function Specifications

- 6.1.2: EventSource Specifications

- 6.1.2.1: EventSource Specifications

- 6.1.2.2: Redis

- 6.1.2.3: Kafka

- 6.1.2.4: Cron

- 6.1.3: EventBus Specifications

- 6.1.3.1: EventBus Specifications

- 6.1.3.2: NATS Streaming

- 6.1.4: Trigger Specifications

- 6.2: FAQ

- 7: Contributing

1 - Introduction

Overview

OpenFunction is a cloud-native open source FaaS (Function as a Service) platform aiming to let you focus on your business logic without having to maintain the underlying runtime environment and infrastructure. You can generate event-driven and dynamically scaling Serverless workloads by simply submitting business-related source code in the form of functions.

Architecture and Design

Core Features

- Cloud agnostic and decoupled from cloud providers’ BaaS

- Pluggable architecture that allows multiple function runtimes

- Support both sync and async functions

- Unique async functions support that can consume events directly from event sources

- Support generating OCI-Compliant container images directly from function source code.

- Flexible autoscaling between 0 and N

- Advanced async function autoscaling based on event sources’ specific metrics

- Simplified BaaS integration for both sync and async functions by introducing Dapr

- Advanced function ingress & traffic management powered by K8s Gateway API (In Progress)

- Flexible and easy-to-use events management framework

License

OpenFunction is licensed under the Apache License, Version 2.0. For more information, see LICENSE.

2 - Getting Started

2.1 - Installation

This document describes how to install OpenFunction.

Prerequisites

You need to have a Kubernetes cluster.

You need to ensure your Kubernetes version meets the requirements described in the following compatibility matrix.

| OpenFunction Version | Kubernetes 1.21 | Kubernetes 1.22 | Kubernetes 1.23 | Kubernetes 1.24 | Kubernetes 1.25 | Kubernetes 1.26+ |
|---|---|---|---|---|---|---|
| HEAD | N/A | N/A | √ | √ | √ | √ |
| v1.2 | N/A | N/A | √ | √ | √ | √ |
| v1.1.x | √ | √ | √ | √ | √ | N/A |
| v1.0.x | √ | √ | √ | √ | √ | N/A |
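The matrix above can also be checked programmatically before an install. The following is a minimal sketch, not part of OpenFunction: the dictionary and the `is_supported` helper are hypothetical names, and the ranges are transcribed by hand from the table.

```python
# Compatibility matrix transcribed from the table above (hypothetical helper data).
# Each value is (lowest supported minor, highest supported minor or None for "and later").
SUPPORTED_K8S_MINORS = {
    "HEAD": (23, None),
    "v1.2": (23, None),
    "v1.1.x": (21, 25),
    "v1.0.x": (21, 25),
}


def is_supported(openfunction_version: str, kubernetes_version: str) -> bool:
    """Return True if the matrix marks this pairing as supported."""
    lowest, highest = SUPPORTED_K8S_MINORS[openfunction_version]
    # Accept strings such as "v1.24.3" or "1.24"; only the minor number matters here.
    minor = int(kubernetes_version.lstrip("v").split(".")[1])
    return minor >= lowest and (highest is None or minor <= highest)


if __name__ == "__main__":
    print(is_supported("v1.2", "v1.24.3"))
    print(is_supported("v1.1.x", "1.26"))
```

The Kubernetes version string would typically come from `kubectl version`; how it is obtained is left out of the sketch.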

Install OpenFunction

You can install OpenFunction and all its dependencies with Helm charts.

The ofn CLI install method is deprecated.

If you want to install OpenFunction in an offline environment, refer to Install OpenFunction in an offline environment.

Requirements

- Kubernetes version: >=v1.21.0-0

- Helm version: >=v3.6.3

Steps to install OpenFunction helm charts

Run the following command to add the OpenFunction chart repository first:

helm repo add openfunction https://openfunction.github.io/charts/
helm repo update

Then you have several options to set up OpenFunction. You can choose to:

Install all components:

kubectl create namespace openfunction
helm install openfunction openfunction/openfunction -n openfunction

Install all components and Revision Controller:

kubectl create namespace openfunction
helm install openfunction openfunction/openfunction -n openfunction --set revisionController.enable=true

Install Serving only (without build):

kubectl create namespace openfunction
helm install openfunction --set global.ShipwrightBuild.enabled=false --set global.TektonPipelines.enabled=false openfunction/openfunction -n openfunction

Install Knative sync runtime only:

kubectl create namespace openfunction
helm install openfunction --set global.Keda.enabled=false openfunction/openfunction -n openfunction

Install OpenFunction async runtime only:

kubectl create namespace openfunction
helm install openfunction --set global.Contour.enabled=false --set global.KnativeServing.enabled=false openfunction/openfunction -n openfunction

Note

For more information about how to install OpenFunction with Helm, see Install OpenFunction with Helm.

Run the following command to verify OpenFunction is up and running:

kubectl get po -n openfunction

Uninstall OpenFunction

Helm

If you installed OpenFunction with Helm, run the following command to uninstall OpenFunction and its dependencies.

helm uninstall openfunction -n openfunction

Note

For more information about how to uninstall OpenFunction with Helm, see Uninstall OpenFunction with Helm.

Upgrade OpenFunction

helm upgrade [RELEASE_NAME] openfunction/openfunction -n openfunction

With Helm v3, CRDs created by this chart are not updated by default and should be manually updated. See also the Helm Documentation on CRDs.

Refer to helm upgrade for command documentation.

Upgrading an existing Release to a new version

From OpenFunction v0.6.0 to OpenFunction v0.7.x

Upgrading from v0.6.0 to v0.7.x involves a breaking change that requires additional manual steps.

Uninstall the Chart

First, you’ll need to uninstall the old openfunction release:

helm uninstall openfunction -n openfunction

Confirm that the component namespaces have been deleted. This can take a while:

kubectl get ns -o=jsonpath='{range .items[?(@.metadata.annotations.meta\.helm\.sh/release-name=="openfunction")]}{.metadata.name}: {.status.phase}{"\n"}{end}'

If the knative-serving namespace stays in the Terminating state for a long time, run the following command and remove the finalizers:

kubectl edit ingresses.networking.internal.knative.dev -n knative-serving

Upgrade OpenFunction CRDs

Then upgrade the OpenFunction CRDs:

kubectl apply -f https://openfunction.sh1a.qingstor.com/crds/v0.7.0/openfunction.yaml

Upgrade dependent components CRDs

You also need to upgrade the CRDs of the dependent components. You only need to deal with the components included in the existing release.

- knative-serving CRDs

kubectl apply -f https://openfunction.sh1a.qingstor.com/crds/v0.7.0/knative-serving.yaml

- shipwright-build CRDs

kubectl apply -f https://openfunction.sh1a.qingstor.com/crds/v0.7.0/shipwright-build.yaml

- tekton-pipelines CRDs

kubectl apply -f https://openfunction.sh1a.qingstor.com/crds/v0.7.0/tekton-pipelines.yaml

Install new chart

helm repo update

helm install openfunction openfunction/openfunction -n openfunction

Note

For more information about how to upgrade OpenFunction with Helm, see Upgrade OpenFunction with Helm.

2.2 - Quickstarts

2.2.1 - Prerequisites

Registry Credential

When building a function, you’ll need to push your function container image to a container registry like Docker Hub or Quay.io. To do that you’ll need to generate a secret for your container registry first.

To create this secret, fill in the REGISTRY_SERVER, REGISTRY_USER and REGISTRY_PASSWORD fields, and then run the following commands.

REGISTRY_SERVER=https://index.docker.io/v1/
REGISTRY_USER=<your_registry_user>
REGISTRY_PASSWORD=<your_registry_password>
kubectl create secret docker-registry push-secret \
    --docker-server="$REGISTRY_SERVER" \
    --docker-username="$REGISTRY_USER" \
    --docker-password="$REGISTRY_PASSWORD"
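To make it clearer what ends up inside a docker-registry secret, here is a small sketch of the JSON document that Kubernetes stores under the `.dockerconfigjson` key of such a secret. The helper name `docker_config_json` and the sample credentials are illustrative assumptions; in practice `kubectl create secret docker-registry` produces this for you.

```python
import base64
import json


def docker_config_json(server: str, username: str, password: str) -> str:
    """Build the JSON document stored under the .dockerconfigjson key."""
    # The "auth" field is the base64 encoding of "username:password".
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    config = {
        "auths": {
            server: {"username": username, "password": password, "auth": auth}
        }
    }
    return json.dumps(config)


if __name__ == "__main__":
    # Placeholder credentials for illustration only.
    print(docker_config_json("https://index.docker.io/v1/", "alice", "s3cret"))
```

Because the credentials are only base64-encoded, not encrypted, the secret should be treated as sensitive.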

Source repository Credential

If your source code is in a private git repository, you’ll need to create a secret containing the private git repo’s username and password:

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: git-repo-secret
  annotations:
    build.shipwright.io/referenced.secret: "true"
type: kubernetes.io/basic-auth
stringData:
  username: <cleartext username>
  password: <cleartext password>
EOF

You can then reference this secret in the Function CR’s spec.build.srcRepo.credentials:

apiVersion: core.openfunction.io/v1beta1
kind: Function
metadata:
  name: function-sample
spec:
  version: "v2.0.0"
  image: "openfunctiondev/sample-go-func:v1"
  imageCredentials:
    name: push-secret
  build:
    builder: openfunction/builder-go:latest
    env:
      FUNC_NAME: "HelloWorld"
      FUNC_CLEAR_SOURCE: "true"
    srcRepo:
      url: "https://github.com/OpenFunction/samples.git"
      sourceSubPath: "functions/knative/hello-world-go"
      revision: "main"
      credentials:
        name: git-repo-secret
  serving:
    template:
      containers:
        - name: function # DO NOT change this
          imagePullPolicy: IfNotPresent
    runtime: "knative"

Kafka

Async functions can be triggered by events in message queues like Kafka. Here you can find steps to set up a Kafka cluster for demo purposes.

Install strimzi-kafka-operator in the default namespace.

helm repo add strimzi https://strimzi.io/charts/
helm install kafka-operator -n default strimzi/strimzi-kafka-operator

Run the following command to create a Kafka cluster and Kafka Topic in the default namespace. The Kafka and Zookeeper clusters created by this command have a storage type of ephemeral and are demonstrated using emptyDir.

Here we create a 1-replica Kafka server named <kafka-server> and a 1-replica topic named <kafka-topic> with 10 partitions.

cat <<EOF | kubectl apply -f -
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: <kafka-server>
  namespace: default
spec:
  kafka:
    version: 3.3.1
    replicas: 1
    listeners:
      - name: plain
        port: 9092
        type: internal
        tls: false
      - name: tls
        port: 9093
        type: internal
        tls: true
    config:
      offsets.topic.replication.factor: 1
      transaction.state.log.replication.factor: 1
      transaction.state.log.min.isr: 1
      default.replication.factor: 1
      min.insync.replicas: 1
      inter.broker.protocol.version: "3.1"
    storage:
      type: ephemeral
  zookeeper:
    replicas: 1
    storage:
      type: ephemeral
  entityOperator:
    topicOperator: {}
    userOperator: {}
---
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaTopic
metadata:
  name: <kafka-topic>
  namespace: default
  labels:
    strimzi.io/cluster: <kafka-server>
spec:
  partitions: 10
  replicas: 1
  config:
    cleanup.policy: delete
    retention.ms: 7200000
    segment.bytes: 1073741824
EOF

Run the following command to check the Pod status and wait for Kafka and Zookeeper to start and run.

$ kubectl get po
NAME                                              READY   STATUS    RESTARTS   AGE
<kafka-server>-entity-operator-568957ff84-nmtlw   3/3     Running   0          8m42s
<kafka-server>-kafka-0                            1/1     Running   0          9m13s
<kafka-server>-zookeeper-0                        1/1     Running   0          9m46s
strimzi-cluster-operator-687fdd6f77-cwmgm         1/1     Running   0          11m

Run the following command to view the metadata for the Kafka cluster.

$ kafkacat -L -b <kafka-server>-kafka-brokers:9092

WasmEdge

Function now supports using WasmEdge as a workload runtime. Here you can find steps to set up the WasmEdge workload runtime in a Kubernetes cluster (with containerd as the container runtime).

You should run the following steps on all the nodes (or a subset of the nodes that will host the wasm workload) of your cluster.

Step 1 : Installing WasmEdge

The easiest way to install WasmEdge is to run the following command. Your system should have git and curl installed.

wget -qO- https://raw.githubusercontent.com/WasmEdge/WasmEdge/master/utils/install.sh | bash -s -- -p /usr/local

Step 2 : Installing Container runtimes

crun

The crun project has WasmEdge support baked in. For now, the easiest approach is to download the binary and move it to /usr/local/bin/

wget https://github.com/OpenFunction/OpenFunction/releases/latest/download/crun-linux-amd64

mv crun-linux-amd64 /usr/local/bin/crun

If the above approach does not work for you, please refer to build and install a crun binary with WasmEdge support.

Step 3 : Setup CRI runtimes

Option 1: containerd

You can follow this installation guide to install containerd and this setup guide to setup containerd for Kubernetes.

First, edit the configuration file /etc/containerd/config.toml and add the following section to set up the crun runtime. Make sure BinaryName is equal to your crun binary path:

# Add crun runtime here

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.crun]

runtime_type = "io.containerd.runc.v2"

pod_annotations = ["*.wasm.*", "wasm.*", "module.wasm.image/*", "*.module.wasm.image", "module.wasm.image/variant.*"]

privileged_without_host_devices = false

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.crun.options]

BinaryName = "/usr/local/bin/crun"

Next, restart containerd service:

sudo systemctl restart containerd

Option 2: CRI-O

You can follow this installation guide to install CRI-O and this setup guide to setup CRI-O for Kubernetes.

CRI-O uses the runc runtime by default, so we need to configure it to use crun instead. This is done by editing two configuration files.

First, create a /etc/crio/crio.conf file and add the following lines as its content. It tells CRI-O to use crun by default.

[crio.runtime]

default_runtime = "crun"

The crun runtime is in turn defined in the /etc/crio/crio.conf.d/01-crio-runc.conf file.

[crio.runtime.runtimes.runc]

runtime_path = "/usr/lib/cri-o-runc/sbin/runc"

runtime_type = "oci"

runtime_root = "/run/runc"

# The above is the original content

# Add crun runtime here

[crio.runtime.runtimes.crun]

runtime_path = "/usr/local/bin/crun"

runtime_type = "oci"

runtime_root = "/run/crun"

Next, restart CRI-O to apply the configuration changes.

systemctl restart crio

2.2.2 - Create Sync Functions

Before creating any functions, make sure you’ve installed all the prerequisites.

Sync functions take the payload of an HTTP request as input, and their output or response is sent to the waiting client immediately after the function logic finishes processing the input payload. Below you can find some sync function examples in different languages:

You can find more function samples here

2.2.3 - Create Async Functions

Before creating any functions, make sure you’ve installed all the prerequisites.

Async functions are event-driven. Their inputs are usually events from non-HTTP event sources such as message queues, cron triggers, and MQTT brokers, and the client usually does not wait for an immediate response after triggering an async function by delivering an event. Below you can find some async function examples in different languages:

| Async Functions | |
|---|---|
| Go | Kafka input & HTTP output binding, Cron input & Kafka output binding, Cron input binding, Kafka input binding, Kafka pubsub |
| Nodejs | MQTT binding & pubsub |
| Python | |
| Java | Cron input & Kafka output binding, Kafka pubsub |
| DotNet | |

You can find more function samples here

2.2.4 - Create Serverless Applications

Before creating any functions, make sure you’ve installed all the prerequisites.

In addition to building and running Serverless Functions, you can also build and run Serverless Applications with OpenFunction.

Here you can find several Serverless Application examples:

| Serverless Applications | |
|---|---|
| Go | Go App with a Dockerfile |
| Java | Java App with a Dockerfile, Java App without a Dockerfile & Source Code |

You can find more info about these Serverless Applications here

2.2.5 - Create Wasm Functions

Before creating any functions, make sure you’ve installed all the prerequisites.

Here you can find wasm function examples:

| Language | Wasm Functions | Runtime |
|---|---|---|
| Rust | wasmedge-http-server | wasmedge |

You can find more info about these functions here

3 - Concepts

3.1 - Function Definition

Function

Function is the control plane of Build and Serving, and it is also the interface through which users interact with OpenFunction. Users don’t need to create the Build or Serving separately because Function is the only place to define a function’s Build and Serving.

Once a function is created, it controls the lifecycle of Build and Serving without user intervention:

- If Build is defined in a function, a builder custom resource will be created to build the function’s container image once the function is deployed.

- If Serving is defined in a function, a serving custom resource will be created to control the function’s serving and autoscaling.

- Build and Serving can be defined together, which means the function image will be built first and then used in serving.

- Build can be defined without Serving. In this case, the function is only used to build an image.

- Serving can be defined without Build. In this case, the function will use a previously built function image for serving.
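The combinations listed above can be summarised as a small decision function. This is only an illustrative sketch of the documented behaviour, not the controller’s actual code; the `lifecycle` helper and its return strings are hypothetical.

```python
def lifecycle(function_spec: dict) -> str:
    """Describe what happens for a Function spec, based on which sections it defines."""
    has_build = "build" in function_spec
    has_serving = "serving" in function_spec
    if has_build and has_serving:
        return "build the image, then serve it"
    if has_build:
        return "build the image only"
    if has_serving:
        return "serve a previously built image"
    return "nothing to do"


if __name__ == "__main__":
    print(lifecycle({"build": {}, "serving": {}}))
    print(lifecycle({"serving": {}}))
```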

Build

OpenFunction uses Shipwright and Cloud Native Buildpacks to build the function source code into container images.

Once a function is created with a Build spec in it, a builder custom resource will be created, which uses Shipwright to manage the build tools and strategy. Shipwright then uses Tekton to control the process of building container images, including fetching source code, generating image artifacts, and publishing images.

Serving

Once a function is created with Serving spec, a Serving custom resource will be created to control a function’s serving phase. Currently OpenFunction Serving supports two runtimes: the Knative sync runtime and the OpenFunction async runtime.

The sync runtime

For sync functions, OpenFunction currently supports using Knative Serving as the runtime. We’re also planning to add another sync function runtime powered by the KEDA http-addon.

The async runtime

OpenFunction’s async runtime is an event-driven runtime implemented on top of KEDA and Dapr. Async functions can be triggered by various event types such as message queues, cron jobs, and MQTT.

Reference

For more information, see Function Specifications.

3.2 - Function Build

Currently, OpenFunction supports building function images using Cloud Native Buildpacks without the need to create a Dockerfile.

In the meantime, you can also use OpenFunction to build Serverless Applications with Dockerfile.

Build functions by defining a build section

You can build your functions or applications from the source code in a git repo or from the source code stored locally.

Build functions from source code in a git repo

You can build a function image by simply adding a build section in the Function definition like below.

If there is a serving section defined as well, the function will be launched as soon as the build completes.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: logs-async-handler
spec:
  version: "v2.0.0"
  image: openfunctiondev/logs-async-handler:v1
  imageCredentials:
    name: push-secret
  build:
    builder: openfunction/builder-go:latest
    env:
      FUNC_NAME: "LogsHandler"
      FUNC_CLEAR_SOURCE: "true"
      ## Customize functions framework version, valid for functions-framework-go for now
      ## Usually you don't need to do so because the builder ships with the latest functions-framework
      # FUNC_FRAMEWORK_VERSION: "v0.4.0"
      ## Use FUNC_GOPROXY to set the goproxy
      # FUNC_GOPROXY: "https://goproxy.cn"
    srcRepo:
      url: "https://github.com/OpenFunction/samples.git"
      sourceSubPath: "functions/async/logs-handler-function/"
      revision: "main"

To push the function image to a container registry, you have to create a secret containing the registry’s credential and add the secret to imageCredentials. You can refer to the prerequisites for more info.

Build functions from local source code

To build functions or applications from local source code, you’ll need to package your local source code into a container image and push this image to a container registry.

Suppose your source code is in the samples directory. You can use the following Dockerfile to build a source code bundle image.

FROM scratch

WORKDIR /

COPY samples samples/

Then you can build the source code bundle image like this:

docker build -t <your registry name>/sample-source-code:latest -f </path/to/the/dockerfile> .

docker push <your registry name>/sample-source-code:latest

It’s recommended to use the empty image scratch as the base image to build the source code bundle image. A non-empty base image may cause the source code copy to fail.

Unlike defining the spec.build.srcRepo.url field for the git repo method, you’ll need to define the spec.build.srcRepo.bundleContainer.image field instead.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: logs-async-handler
spec:
  build:
    srcRepo:
      bundleContainer:
        image: openfunctiondev/sample-source-code:latest
      sourceSubPath: "/samples/functions/async/logs-handler-function/"

The sourceSubPath is the absolute path of the source code in the source code bundle image.

Build functions with the pack CLI

It is often necessary to build function images directly from local source code, especially for debugging or in offline environments. You can use the pack CLI for this.

Pack is a tool maintained by the Cloud Native Buildpacks project to support the use of buildpacks. It enables the following functionality:

- Build an application using buildpacks.

- Rebase application images created using buildpacks.

- Creation of various components used within the ecosystem.

Follow the instructions here to install the pack CLI tool.

You can find more details on how to use the pack CLI here.

To build OpenFunction function images from source code locally, you can follow the steps below:

Download function samples

git clone https://github.com/OpenFunction/samples.git

cd samples/functions/knative/hello-world-go

Build the function image with the pack CLI

pack build func-helloworld-go --builder openfunction/builder-go:v2.4.0-1.17 --env FUNC_NAME="HelloWorld" --env FUNC_CLEAR_SOURCE=true

Launch the function image locally

docker run --rm --env="FUNC_CONTEXT={\"name\":\"HelloWorld\",\"version\":\"v1.0.0\",\"port\":\"8080\",\"runtime\":\"Knative\"}" --env="CONTEXT_MODE=self-host" --name func-helloworld-go -p 8080:8080 func-helloworld-go

Visit the function

curl http://localhost:8080

Output example:

hello, world!
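The escaped JSON in the FUNC_CONTEXT variable of the `docker run` command above is easy to get wrong by hand. As a hedged sketch, the command line can be generated instead; the `docker_run_command` helper is a hypothetical name, and the context fields are copied from the command shown above.

```python
import json
import shlex


def docker_run_command(image: str, func_context: dict) -> str:
    """Return a shell-safe `docker run` command line for a function image."""
    # Compact JSON keeps the environment variable on one line.
    context_json = json.dumps(func_context, separators=(",", ":"))
    port = func_context["port"]
    args = [
        "docker", "run", "--rm",
        "--env", f"FUNC_CONTEXT={context_json}",
        "--env", "CONTEXT_MODE=self-host",
        "--name", image,
        "-p", f"{port}:{port}",
        image,
    ]
    # shlex.join quotes each argument, so no manual backslash escaping is needed.
    return shlex.join(args)


if __name__ == "__main__":
    context = {
        "name": "HelloWorld",
        "version": "v1.0.0",
        "port": "8080",
        "runtime": "Knative",
    }
    print(docker_run_command("func-helloworld-go", context))
```

The printed command can be pasted into a POSIX shell. It uses the `--env KEY=VALUE` form rather than `--env="KEY=VALUE"`, which Docker accepts equally.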

OpenFunction Builders

To build a function image with Cloud Native Buildpacks, a builder image is needed.

Here you can find builders for popular languages maintained by the OpenFunction community:

| Builders | |
|---|---|
| Go | openfunction/builder-go:v2.4.0 (openfunction/builder-go:latest) |
| Nodejs | openfunction/builder-node:v2-16.15 (openfunction/builder-node:latest) |
| Java | openfunction/builder-java:v2-11, openfunction/builder-java:v2-16, openfunction/builder-java:v2-17, openfunction/builder-java:v2-18 |
| Python | openfunction/gcp-builder:v1 |
| DotNet | openfunction/gcp-builder:v1 |

3.3 - Build Strategy

Build Strategy is used to control the build process. There are two types of strategies, ClusterBuildStrategy and BuildStrategy.

Both strategies define a group of steps necessary to control the application build process.

ClusterBuildStrategy is cluster-wide, while BuildStrategy is namespaced.

There are 4 built-in ClusterBuildStrategy resources in OpenFunction. You can find more details in the following sections.

openfunction

The openfunction ClusterBuildStrategy uses Buildpacks to build function images. It is the default build strategy.

The following are the parameters of the openfunction ClusterBuildStrategy:

| Name | Type | Description |
|---|---|---|
| RUN_IMAGE | string | Reference to a run image to use |
| CACHE_IMAGE | string | Cache Image is a way to preserve cache layers across different builds, which can improve build performance when building functions or applications with lots of dependencies like Java functions. |
| BASH_IMAGE | string | The bash image that the strategy uses. |
| ENV_VARS | string | Environment variables to set during build-time. The format is key1=value1,key2=value2. |

Users can set these parameters like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: logs-async-handler
spec:
  build:
    shipwright:
      params:
        RUN_IMAGE: ""
        ENV_VARS: ""
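The ENV_VARS parameter described above expects a single key1=value1,key2=value2 string. The sketch below shows one way to produce and read back that format; both helper names are hypothetical, and because the source does not say whether commas inside values can be escaped, the sketch simply rejects them.

```python
def format_env_vars(env: dict) -> str:
    """Join build-time variables into the key1=value1,key2=value2 form."""
    for key, value in env.items():
        # Assumption: no escaping mechanism exists, so separators are not allowed in the data.
        if "," in key or "=" in key or "," in value:
            raise ValueError(f"cannot encode {key!r} in a comma-separated list")
    return ",".join(f"{key}={value}" for key, value in env.items())


def parse_env_vars(text: str) -> dict:
    """Split a key1=value1,key2=value2 string back into a dictionary."""
    if not text:
        return {}
    # Split on the first "=" only, so values may themselves contain "=".
    return dict(item.split("=", 1) for item in text.split(","))


if __name__ == "__main__":
    # Variable names here are examples only.
    print(format_env_vars({"GOPROXY": "https://goproxy.cn", "CGO_ENABLED": "0"}))
```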

buildah

The buildah ClusterBuildStrategy uses buildah to build application images.

To use buildah ClusterBuildStrategy, you can define a Function like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: sample-go-app
  namespace: default
spec:
  build:
    builder: openfunction/buildah:v1.23.1
    dockerfile: Dockerfile
    shipwright:
      strategy:
        kind: ClusterBuildStrategy
        name: buildah

The following are the parameters of the buildah ClusterBuildStrategy:

| Name | Type | Description | Default |
|---|---|---|---|
| registry-search | string | The registries for searching short name images such as golang:latest, separated by commas. | docker.io,quay.io |
| registry-insecure | string | The fully-qualified name of insecure registries. An insecure registry is a registry that does not have a valid SSL certificate or only supports HTTP. | |
| registry-block | string | The registries that need to block pull access. | "" |

kaniko

The kaniko ClusterBuildStrategy uses kaniko to build application images.

To use kaniko ClusterBuildStrategy, you can define a Function like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-kaniko
  namespace: default
spec:
  build:
    builder: openfunction/kaniko-executor:v1.7.0
    dockerfile: Dockerfile
    shipwright:
      strategy:
        kind: ClusterBuildStrategy
        name: kaniko

ko

The ko ClusterBuildStrategy uses ko to build Go application images.

To use ko ClusterBuildStrategy, you can define a Function like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-ko
  namespace: default
spec:
  build:
    builder: golang:1.17
    dockerfile: Dockerfile
    shipwright:
      strategy:
        kind: ClusterBuildStrategy
        name: ko

The following are the parameters of ko ClusterBuildStrategy:

| Name | Type | Description | Default |
|---|---|---|---|
| go-flags | string | Value for the GOFLAGS environment variable. | "" |
| ko-version | string | Version of ko, must be either 'latest', or a release name from https://github.com/google/ko/releases. | "" |
| package-directory | string | The directory inside the context directory containing the main package. | "." |

Custom Strategy

Users can customize their own strategy. To customize strategy, you can refer to this.

3.4 - Function Trigger

Function Triggers are used to define how to trigger a function. Currently, there are two kinds of triggers: HTTP Trigger and Dapr Trigger. The default trigger is the HTTP Trigger.

HTTP Trigger

HTTP Trigger triggers a function with an HTTP request. You can define an HTTP Trigger for a function like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  serving:
    triggers:
      http:
        port: 8080
        route:
          rules:
            - matches:
                - path:
                    type: PathPrefix
                    value: /echo
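The PathPrefix match type in the route rule above compares paths element by element rather than character by character. The following sketch illustrates that behaviour under the assumption that the route follows the Kubernetes Gateway API HTTPRoute semantics; `path_prefix_matches` is a hypothetical helper, not an OpenFunction API.

```python
def path_prefix_matches(prefix: str, request_path: str) -> bool:
    """Return True if request_path falls under prefix, comparing whole path elements."""
    # Drop empty elements so that "/echo" and "/echo/" are treated alike.
    prefix_parts = [part for part in prefix.split("/") if part]
    path_parts = [part for part in request_path.split("/") if part]
    return path_parts[: len(prefix_parts)] == prefix_parts


if __name__ == "__main__":
    for path in ("/echo", "/echo/hello", "/echoes"):
        print(path, path_prefix_matches("/echo", path))
```

Under this rule, a request to /echo/hello reaches the function, while /echoes does not, because "echoes" is a different path element from "echo".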

Dapr Trigger

Dapr Trigger triggers a function with events from Dapr bindings or Dapr pubsub. You can define a function with Dapr Trigger like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: logs-async-handler
  namespace: default
spec:
  serving:
    bindings:
      kafka-receiver:
        metadata:
          - name: brokers
            value: kafka-server-kafka-brokers:9092
          - name: authRequired
            value: "false"
          - name: publishTopic
            value: logs
          - name: topics
            value: logs
          - name: consumerGroup
            value: logs-handler
        type: bindings.kafka
        version: v1
    triggers:
      dapr:
        - name: kafka-receiver
          type: bindings.kafka

Function Inputs

Input is where a function can get extra input data from. Currently, Dapr State Stores are supported as Input.

You can define function input like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: logs-async-handler
  namespace: default
spec:
  serving:
    triggers:
      inputs:
        - dapr:
            name: mysql
            type: state.mysql

3.5 - Function Outputs

Function Outputs

Output is a component that the function can send data to, including:

- Any Dapr Output Binding components of the Dapr Bindings Building Block

- Any Dapr Pub/sub brokers components of the Dapr Pub/sub Building Block

For example, here you can find an async function with a cron input binding and a Kafka output binding:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: cron-input-kafka-output
spec:
  ...
  serving:
    ...
    outputs:
      - dapr:
          name: kafka-server
          type: bindings.kafka
          operation: "create"
    bindings:
      kafka-server:
        type: bindings.kafka
        version: v1
        metadata:
          - name: brokers
            value: "kafka-server-kafka-brokers:9092"
          - name: topics
            value: "sample-topic"
          - name: consumerGroup
            value: "bindings-with-output"
          - name: publishTopic
            value: "sample-topic"
          - name: authRequired
            value: "false"

Here is another async function example that uses a Kafka Pub/sub component as input.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: autoscaling-subscriber
spec:
  ...
  serving:
    ...
    runtime: "async"
    outputs:
      - dapr:
          name: kafka-server
          type: pubsub.kafka
          topic: "sample-topic"
    pubsub:
      kafka-server:
        type: pubsub.kafka
        version: v1
        metadata:
          - name: brokers
            value: "kafka-server-kafka-brokers:9092"
          - name: authRequired
            value: "false"
          - name: allowedTopics
            value: "sample-topic"
          - name: consumerID
            value: "autoscaling-subscriber"

3.6 - Function Scaling

Scaling is one of the core features of a FaaS or Serverless platform.

OpenFunction defines function scaling in ScaleOptions and defines triggers to activate function scaling in Triggers.

ScaleOptions

You can define unified scale options like below, which are valid for both sync and async functions:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  serving:
    scaleOptions:
      minReplicas: 0
      maxReplicas: 10

Usually, simply defining minReplicas and maxReplicas is not enough for async functions. You can define separate scale options for async functions like below, which will override minReplicas and maxReplicas.

You can find more details of async function scale options in the KEDA ScaledObject Spec and KEDA ScaledJob Spec.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  serving:
    scaleOptions:
      minReplicas: 0
      maxReplicas: 10
      keda:
        scaledObject:
          pollingInterval: 15
          cooldownPeriod: 60
          advanced:
            horizontalPodAutoscalerConfig:
              behavior:
                scaleDown:
                  stabilizationWindowSeconds: 45
                  policies:
                    - type: Percent
                      value: 50
                      periodSeconds: 15
                scaleUp:
                  stabilizationWindowSeconds: 0

You can also set advanced scale options for Knative sync functions, which will override minReplicas and maxReplicas.

You can find more details of the Knative sync function scale options here

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  serving:
    scaleOptions:
      knative:
        autoscaling.knative.dev/initial-scale: "1"
        autoscaling.knative.dev/scale-down-delay: "0"
        autoscaling.knative.dev/window: "60s"
        autoscaling.knative.dev/panic-window-percentage: "10.0"
        autoscaling.knative.dev/metric: "concurrency"
        autoscaling.knative.dev/target: "100"

Triggers

Triggers define how to activate function scaling for async functions. You can use triggers defined in all KEDA scalers as OpenFunction’s trigger spec.

Sync functions’ scaling is activated by various options of HTTP requests, which are already defined in the previous ScaleOptions section.

apiVersion: core.openfunction.io/v1beta2

kind: Function

metadata:

name: function-sample

spec:

serving:

scaleOptions:

keda:

triggers:

- type: kafka

metadata:

topic: logs

bootstrapServers: kafka-server-kafka-brokers.default.svc.cluster.local:9092

consumerGroup: logs-handler

lagThreshold: "20"
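As a rough illustration of what lagThreshold means, the following Go sketch estimates how many replicas a lag-based trigger would ask for. It assumes the common average-value rule (total lag divided by the threshold, rounded up) and clamps the result to the minReplicas 0 and maxReplicas 10 used in the first ScaleOptions example. The helper desiredReplicas is hypothetical and not part of OpenFunction or KEDA, and the real autoscaler applies additional rules that this sketch ignores.

```go
package main

import "fmt"

// desiredReplicas is a hypothetical helper: it estimates the replica count
// for a lag-based trigger as ceil(totalLag / lagThreshold), clamped to
// [minReplicas, maxReplicas]. This is a simplification for illustration.
func desiredReplicas(totalLag, lagThreshold, minReplicas, maxReplicas int64) int64 {
	if lagThreshold <= 0 {
		return minReplicas
	}
	// Integer ceiling division.
	n := (totalLag + lagThreshold - 1) / lagThreshold
	if n < minReplicas {
		n = minReplicas
	}
	if n > maxReplicas {
		n = maxReplicas
	}
	return n
}

func main() {
	// lagThreshold "20" comes from the trigger example; the replica bounds
	// are assumed from the first ScaleOptions example.
	for _, lag := range []int64{0, 15, 45, 1000} {
		fmt.Printf("lag=%d -> replicas=%d\n", lag, desiredReplicas(lag, 20, 0, 10))
	}
}
```

With no lag the estimate stays at zero, which matches the scale-to-zero behavior that minReplicas: 0 allows.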

3.7 - Function Signatures

Comparison of different function signatures

There are three function signatures in OpenFunction: HTTP, CloudEvent, and OpenFunction. Let’s explain them in more detail using Go functions as an example.

HTTP and CloudEvent signatures can be used to create sync functions, while the OpenFunction signature can be used to create both sync and async functions.

Furthermore, the OpenFunction signature can utilize various Dapr building blocks, including Bindings and Pub/sub, to access various BaaS services, which helps to create more powerful functions. (Dapr State management and Configuration will be supported soon.)

| | HTTP | CloudEvent | OpenFunction |

|---|---|---|---|

| Signature | func(http.ResponseWriter, *http.Request) error | func(context.Context, cloudevents.Event) error | func(ofctx.Context, []byte) (ofctx.Out, error) |

| Sync Functions | Supported | Supported | Supported |

| Async Functions | Not supported | Not supported | Supported |

| Dapr Binding | Not supported | Not supported | Supported |

| Dapr Pub/sub | Not supported | Not supported | Supported |

Samples

As you can see, the OpenFunction signature is the recommended function signature, and we’re working on supporting it in more language runtimes.
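To make the HTTP column of the table concrete, here is a minimal Go sketch of a function that follows the listed HTTP signature. It uses only the standard library and invokes the function in-process with a fake request, so it does not show how the function is registered with the OpenFunction functions framework. The function name HelloWorld, the name query parameter, and the invoke helper are illustrative assumptions.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// HelloWorld follows the HTTP signature listed in the table:
// func(http.ResponseWriter, *http.Request) error.
// The name and response text are illustrative only.
func HelloWorld(w http.ResponseWriter, r *http.Request) error {
	name := r.URL.Query().Get("name")
	if name == "" {
		name = "World"
	}
	_, err := fmt.Fprintf(w, "Hello, %s!", name)
	return err
}

// invoke calls the function in-process with a fake request so the sketch
// runs without a cluster or the functions framework.
func invoke(target string) (string, error) {
	req := httptest.NewRequest(http.MethodGet, target, nil)
	rec := httptest.NewRecorder()
	if err := HelloWorld(rec, req); err != nil {
		return "", err
	}
	return rec.Body.String(), nil
}

func main() {
	body, err := invoke("/?name=OpenFunction")
	if err != nil {
		panic(err)
	}
	fmt.Println(body)
}
```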

3.8 - Wasm Functions

WasmEdge is a lightweight, high-performance, and extensible WebAssembly runtime for cloud native, edge, and decentralized applications. It powers serverless apps, embedded functions, microservices, smart contracts, and IoT devices.

OpenFunction now supports building and running wasm functions with WasmEdge as the workload runtime.

You can find the WasmEdge Integration proposal here.

Wasm container images

The wasm image containing the wasm binary is a special container image without the OS layer. A special annotation module.wasm.image/variant: compat-smart should be added to this wasm container image for a wasm runtime like WasmEdge to recognize it. This is handled automatically in OpenFunction and users only need to specify the workloadRuntime as wasmedge.

The build phase of the wasm container images

If function.spec.workloadRuntime is set to wasmedge or the function’s annotation contains module.wasm.image/variant: compat-smart, function.spec.build.shipwright.strategy will be automatically generated based on the ClusterBuildStrategy named wasmedge in order to build a wasm container image with the module.wasm.image/variant: compat-smart annotation.

The serving phase of the wasm container images

When function.spec.workloadRuntime is set to wasmedge or the function’s annotation contains module.wasm.image/variant: compat-smart:

- If function.spec.serving.annotations does not contain module.wasm.image/variant, module.wasm.image/variant: compat-smart will be automatically added to function.spec.serving.annotations.

- If function.spec.serving.template.runtimeClassName is not set, this runtimeClassName will be automatically set to the default openfunction-crun.

If your Kubernetes cluster is in a public cloud like Azure, you can set spec.serving.template.runtimeClassName manually to override the default runtimeClassName.
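The serving-phase defaults described above can be sketched in Go as follows. This is only an illustration of the two rules, not the controller’s actual code: the Serving struct is a hypothetical, trimmed-down stand-in for function.spec.serving, and applyWasmDefaults is an assumed helper name.

```go
package main

import "fmt"

const (
	wasmVariantKey    = "module.wasm.image/variant"
	wasmVariantCompat = "compat-smart"
	defaultRuntime    = "openfunction-crun"
)

// Serving is a hypothetical, trimmed-down stand-in for function.spec.serving.
type Serving struct {
	Annotations      map[string]string
	RuntimeClassName string
}

// applyWasmDefaults mirrors the two defaulting rules described in the text.
// It only acts when the function is a wasm function.
func applyWasmDefaults(workloadRuntime string, fnAnnotations map[string]string, s *Serving) {
	isWasm := workloadRuntime == "wasmedge" || fnAnnotations[wasmVariantKey] == wasmVariantCompat
	if !isWasm {
		return
	}
	if s.Annotations == nil {
		s.Annotations = map[string]string{}
	}
	// Rule 1: add the variant annotation only if it is missing.
	if _, ok := s.Annotations[wasmVariantKey]; !ok {
		s.Annotations[wasmVariantKey] = wasmVariantCompat
	}
	// Rule 2: keep a user-provided runtimeClassName, otherwise use the default.
	if s.RuntimeClassName == "" {
		s.RuntimeClassName = defaultRuntime
	}
}

func main() {
	s := &Serving{}
	applyWasmDefaults("wasmedge", nil, s)
	fmt.Println(s.Annotations[wasmVariantKey], s.RuntimeClassName)
}
```

A manually set runtimeClassName, as in the public cloud case mentioned above, is left untouched by the second rule.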

Build and run wasm functions

To set up the WasmEdge workload runtime in a Kubernetes cluster and push images to a container registry, please refer to the prerequisites section for more info.

You can find more info about this sample Function here.

- Create a wasm function

cat <<EOF | kubectl apply -f -

apiVersion: core.openfunction.io/v1beta2

kind: Function

metadata:

name: wasmedge-http-server

spec:

workloadRuntime: wasmedge

image: openfunctiondev/wasmedge_http_server:0.1.0

imageCredentials:

name: push-secret

build:

dockerfile: Dockerfile

srcRepo:

revision: main

sourceSubPath: functions/knative/wasmedge/http-server

url: https://github.com/OpenFunction/samples

serving:

scaleOptions:

minReplicas: 0

template:

containers:

- command:

- /wasmedge_hyper_server.wasm

imagePullPolicy: IfNotPresent

livenessProbe:

initialDelaySeconds: 3

periodSeconds: 30

tcpSocket:

port: 8080

name: function

triggers:

http:

port: 8080

route:

rules:

- matches:

- path:

type: PathPrefix

value: /echo

EOF

- Check the wasm function status

kubectl get functions.core.openfunction.io -w

NAME BUILDSTATE SERVINGSTATE BUILDER SERVING ADDRESS AGE

wasmedge-http-server Succeeded Running builder-4p2qq serving-lrd8c http://wasmedge-http-server.default.svc.cluster.local/echo 12m

- Access the wasm function

Once the BUILDSTATE becomes Succeeded and the SERVINGSTATE becomes Running, you can access this function through the address in the ADDRESS field:

kubectl run curl --image=radial/busyboxplus:curl -i --tty

curl http://wasmedge-http-server.default.svc.cluster.local/echo -X POST -d "WasmEdge"

WasmEdge

3.9 - Serverless Applications

In addition to building and running Serverless Functions, you can also build and run Serverless Applications with OpenFunction.

OpenFunction supports building source code into container images in two different ways:

- Using Cloud Native Buildpacks to build source code without a Dockerfile

- Using Buildah or BuildKit to build source code with a Dockerfile

To push images to a container registry, you’ll need to create a secret containing the registry’s credential and add the secret to imageCredentials. Please refer to the prerequisites section for more info.

Build and run a Serverless Application with a Dockerfile

If you already created a Dockerfile for your application like this Go Application, you can build and run this application in the serverless way like this:

- Create the sample go serverless application

cat <<EOF | kubectl apply -f -

apiVersion: core.openfunction.io/v1beta2

kind: Function

metadata:

name: sample-go-app

namespace: default

spec:

build:

builder: openfunction/buildah:v1.23.1

shipwright:

strategy:

kind: ClusterBuildStrategy

name: buildah

srcRepo:

revision: main

sourceSubPath: apps/buildah/go

url: https://github.com/OpenFunction/samples.git

image: openfunctiondev/sample-go-app:v1

imageCredentials:

name: push-secret

serving:

template:

containers:

- imagePullPolicy: IfNotPresent

name: function

triggers:

http:

port: 8080

version: v1.0.0

workloadRuntime: OCIContainer

EOF

- Check the application status

You can then check the serverless app’s status by kubectl get functions.core.openfunction.io -w:

kubectl get functions.core.openfunction.io -w

NAME BUILDSTATE SERVINGSTATE BUILDER SERVING ADDRESS AGE

sample-go-app Succeeded Running builder-jgnzp serving-q6wdp http://sample-go-app.default.svc.cluster.local/ 22m

- Access this application

Once the BUILDSTATE becomes Succeeded and the SERVINGSTATE becomes Running, you can access this Go serverless app through the address in the ADDRESS field:

kubectl run curl --image=radial/busyboxplus:curl -i --tty

curl http://sample-go-app.default.svc.cluster.local

Here you can find a Java Serverless Application (with a Dockerfile) example.

Build and run a Serverless Application without a Dockerfile

If you have an application without a Dockerfile like this Java Application, you can also build and run your application in the serverless way like this:

- Create the sample Java serverless application

cat <<EOF | kubectl apply -f -

apiVersion: core.openfunction.io/v1beta2

kind: Function

metadata:

name: sample-java-app-buildpacks

namespace: default

spec:

build:

builder: cnbs/sample-builder:alpine

srcRepo:

revision: main

sourceSubPath: apps/java-maven

url: https://github.com/buildpacks/samples.git

image: openfunction/sample-java-app-buildpacks:v1

imageCredentials:

name: push-secret

serving:

template:

containers:

- imagePullPolicy: IfNotPresent

name: function

resources: {}

triggers:

http:

port: 8080

version: v1.0.0

workloadRuntime: OCIContainer

EOF

- Check the application status

You can then check the serverless app’s status by kubectl get functions.core.openfunction.io -w:

kubectl get functions.core.openfunction.io -w

NAME BUILDSTATE SERVINGSTATE BUILDER SERVING ADDRESS AGE

sample-java-app-buildpacks Succeeded Running builder-jgnzp serving-q6wdp http://sample-java-app-buildpacks.default.svc.cluster.local/ 22m

- Access this application

Once the BUILDSTATE becomes Succeeded and the SERVINGSTATE becomes Running, you can access this Java serverless app through the address in the ADDRESS field:

kubectl run curl --image=radial/busyboxplus:curl -i --tty

curl http://sample-java-app-buildpacks.default.svc.cluster.local

3.10 - BaaS Integration

One of the unique features of OpenFunction is its simple integration with various backend services (BaaS) through Dapr. Currently, OpenFunction supports Dapr pub/sub and bindings building blocks, and more will be added in the future.

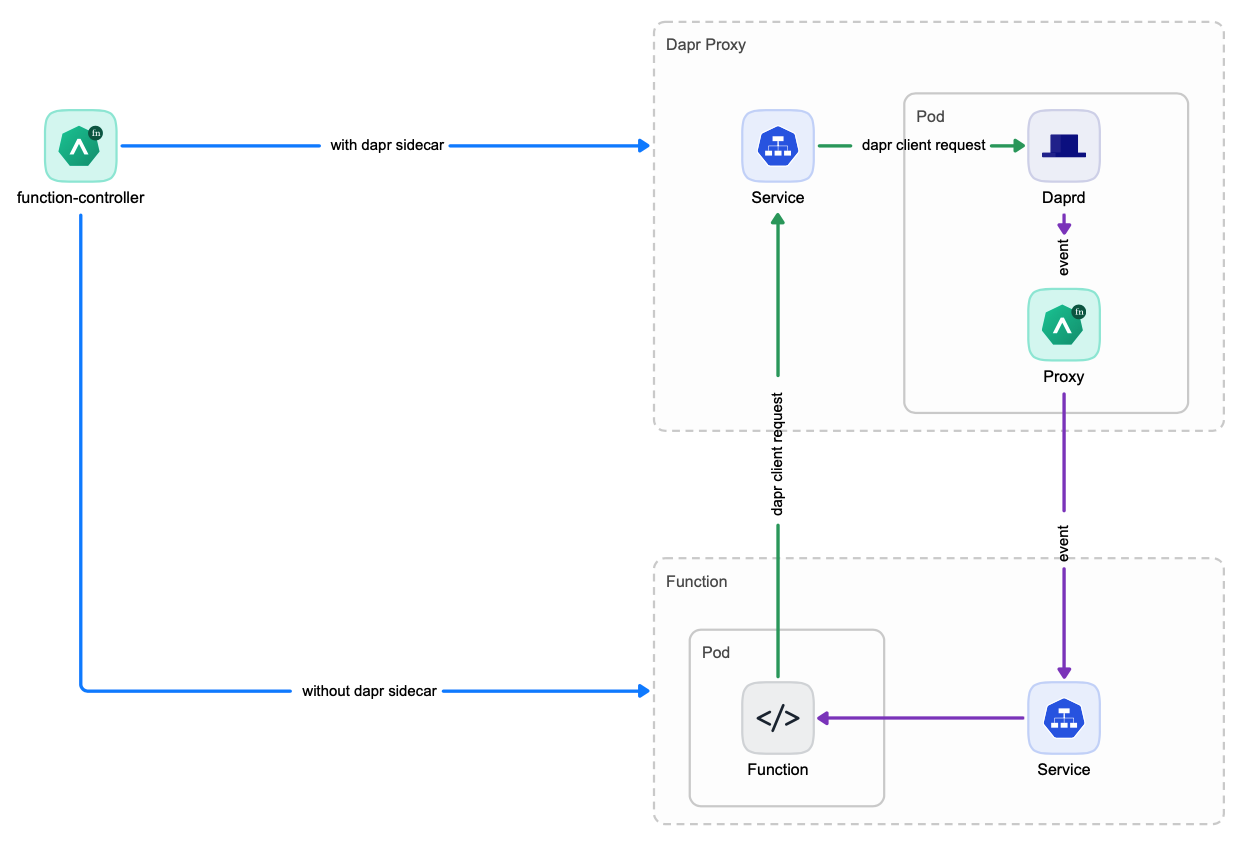

In OpenFunction v0.7.0 and versions prior to v0.7.0, OpenFunction integrates with BaaS by injecting a dapr sidecar container into each function instance’s pod, which leads to the following problems:

- The entire function instance’s launch time is slowed down by the launching of the dapr sidecar container.

- The dapr sidecar container may consume more resources than the function container itself.

To address the problems above, OpenFunction introduces the Dapr Standalone Mode in v0.8.0.

Dapr Standalone Mode

In Dapr standalone mode, one Dapr Proxy service will be created for each function, which is then shared by all instances of this function. This way, there is no need to launch a separate Dapr sidecar container for each function instance anymore, which reduces the function launch time significantly.

Choose the appropriate Dapr Service Mode

So now you have two options to integrate with BaaS:

- Dapr Sidecar Mode

- Dapr Standalone Mode

You can choose the appropriate Dapr Service Mode for your functions. The Dapr Standalone Mode is the recommended and default mode. You can use Dapr Sidecar Mode if your function doesn’t scale frequently or you have difficulty using the Dapr Standalone Mode.

You can control how to integrate with BaaS with two flags, both of which can be set in the function’s spec.serving.annotations:

- openfunction.io/enable-dapr can be set to true or false

- openfunction.io/dapr-service-mode can be set to standalone or sidecar

- When openfunction.io/enable-dapr is set to true, users can choose the Dapr Service Mode by setting openfunction.io/dapr-service-mode to standalone or sidecar.

- When openfunction.io/enable-dapr is set to false, the value of openfunction.io/dapr-service-mode will be ignored and neither Dapr Sidecar nor Dapr Proxy Service will be launched.

Both flags have default values if they are not set.

- The value of openfunction.io/enable-dapr will be set to true if it’s not defined in spec.serving.annotations and the function definition contains either spec.serving.inputs or spec.serving.outputs. Otherwise it will be set to false.

- The default value of openfunction.io/dapr-service-mode is standalone if not set.
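The defaulting rules for the two flags can be summarized in a short Go sketch. The helper resolveDapr and its boolean parameters are hypothetical and only restate the rules listed above; they are not taken from the OpenFunction code base.

```go
package main

import "fmt"

const (
	enableDaprKey  = "openfunction.io/enable-dapr"
	serviceModeKey = "openfunction.io/dapr-service-mode"
)

// resolveDapr is a hypothetical helper that applies the defaulting rules.
// It returns whether Dapr is enabled and, if so, which service mode is
// used ("" when Dapr is disabled).
func resolveDapr(annotations map[string]string, hasInputs, hasOutputs bool) (bool, string) {
	// Default when the annotation is absent: enabled only if the function
	// defines inputs or outputs.
	enabled := hasInputs || hasOutputs
	if v, ok := annotations[enableDaprKey]; ok {
		enabled = v == "true"
	}
	if !enabled {
		// dapr-service-mode is ignored when Dapr is disabled.
		return false, ""
	}
	mode, ok := annotations[serviceModeKey]
	if !ok {
		mode = "standalone" // documented default
	}
	return true, mode
}

func main() {
	// No annotations, but the function has outputs: standalone by default.
	fmt.Println(resolveDapr(nil, false, true))
	// Explicitly disabled: the service mode is ignored.
	fmt.Println(resolveDapr(map[string]string{
		enableDaprKey:  "false",
		serviceModeKey: "sidecar",
	}, true, true))
}
```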

Below you can find a function example to set these two flags:

apiVersion: core.openfunction.io/v1beta2

kind: Function

metadata:

name: cron-input-kafka-output

spec:

version: "v2.0.0"

image: openfunctiondev/cron-input-kafka-output:v1

imageCredentials:

name: push-secret

build:

builder: openfunction/builder-go:latest

env:

FUNC_NAME: "HandleCronInput"

FUNC_CLEAR_SOURCE: "true"

srcRepo:

url: "https://github.com/OpenFunction/samples.git"

sourceSubPath: "functions/async/bindings/cron-input-kafka-output"

revision: "main"

serving:

annotations:

openfunction.io/enable-dapr: "true"

openfunction.io/dapr-service-mode: "standalone"

template:

containers:

- name: function # DO NOT change this

imagePullPolicy: IfNotPresent

triggers:

dapr:

- name: cron

type: bindings.cron

outputs:

- dapr:

component: kafka-server

operation: "create"

bindings:

cron:

type: bindings.cron

version: v1

metadata:

- name: schedule

value: "@every 2s"

kafka-server:

type: bindings.kafka

version: v1

metadata:

- name: brokers

value: "kafka-server-kafka-brokers:9092"

- name: topics

value: "sample-topic"

- name: consumerGroup

value: "bindings-with-output"

- name: publishTopic

value: "sample-topic"

- name: authRequired

value: "false"

3.11 - Networking

3.11.1 - Introduction

Overview

Starting from v0.5.0, OpenFunction used Kubernetes Ingress to provide unified entrypoints for sync functions, and an nginx ingress controller had to be installed.

With the maturity of the Kubernetes Gateway API, we decided to implement OpenFunction Gateway based on the Kubernetes Gateway API to replace the previous ingress-based domain method in OpenFunction v0.7.0.

You can find the OpenFunction Gateway proposal here.

OpenFunction Gateway provides a more powerful and more flexible function gateway, including features like:

- Enable users to switch to any gateway implementation that supports the Kubernetes Gateway API, such as Contour, Istio, Apache APISIX, Envoy Gateway (in the future) and more, in an easier and vendor-neutral way.

- Users can choose to install a default gateway implementation (Contour) and then define a new gateway.networking.k8s.io, or use any existing gateway implementation in their environment and then reference an existing gateway.networking.k8s.io.

- Allow users to customize their own function access pattern like hostTemplate: "{{.Name}}.{{.Namespace}}.{{.Domain}}" for host-based access.

- Allow users to customize their own function access pattern like pathTemplate: "{{.Namespace}}/{{.Name}}" for path-based access.

- Allow users to customize each function’s route rules (host-based, path-based or both) in the function definition. Default route rules are provided for each function if no customized route rules are defined.

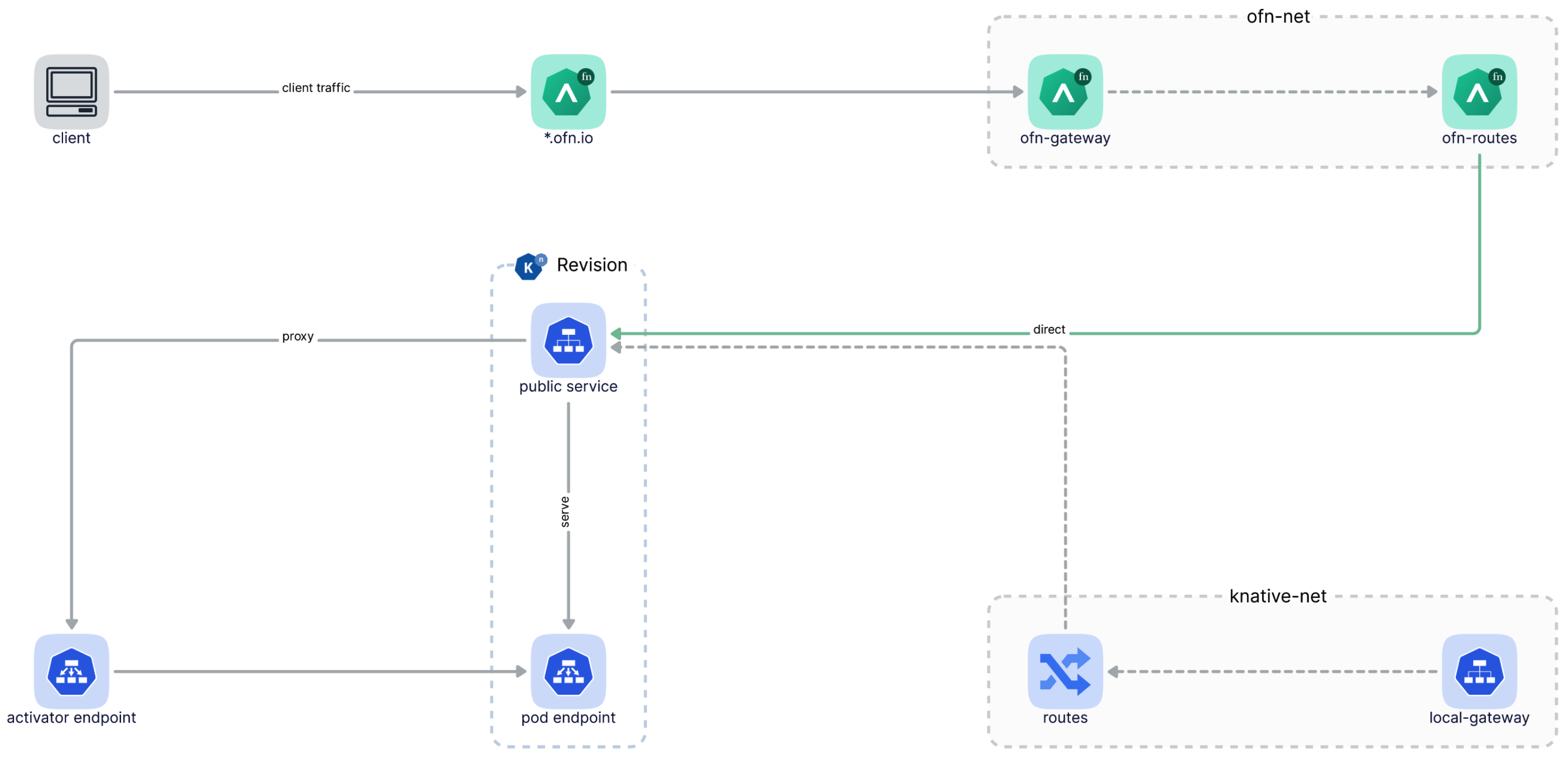

- Send traffic to Knative service revisions directly without going through Knative’s own gateway anymore. Since OpenFunction 0.7.0, you need only OpenFunction Gateway to access OpenFunction sync functions, and you can ignore Knative’s domain config errors if you do not need to access the Knative service directly.

- Traffic splitting between function revisions (in the future)

The following diagram illustrates how client traffic goes through OpenFunction Gateway and then reaches a function directly:

3.11.2 - OpenFunction Gateway

Inside OpenFunction Gateway

Backed by the Kubernetes Gateway, an OpenFunction Gateway defines how users can access sync functions.

Whenever an OpenFunction Gateway is created, the gateway controller will:

- Add a default listener named ofn-http-internal to gatewaySpec.listeners if there isn’t one there.

- Generate gatewaySpec.listeners.[*].hostname based on domain or clusterDomain.

- Inject gatewaySpec.listeners into the existing Kubernetes Gateway defined by the gatewayRef of the OpenFunction Gateway.

- Create a new Kubernetes Gateway based on the gatewaySpec.listeners field in gatewayDef of the OpenFunction Gateway.

- Create a service named gateway.openfunction.svc.cluster.local that defines a unified entry for sync functions.

After an OpenFunction Gateway is deployed, you’ll be able to find the status of Kubernetes Gateway and its listeners in OpenFunction Gateway status:

status:

conditions:

- message: Gateway is scheduled

reason: Scheduled

status: "True"

type: Scheduled

- message: Valid Gateway

reason: Valid

status: "True"

type: Ready

listeners:

- attachedRoutes: 0

conditions:

- message: Valid listener

reason: Ready

status: "True"

type: Ready

name: ofn-http-internal

supportedKinds:

- group: gateway.networking.k8s.io

kind: HTTPRoute

- attachedRoutes: 0

conditions:

- message: Valid listener

reason: Ready

status: "True"

type: Ready

name: ofn-http-external

supportedKinds:

- group: gateway.networking.k8s.io

kind: HTTPRoute

The Default OpenFunction Gateway

OpenFunction Gateway uses Contour as the default Kubernetes Gateway implementation.

The following OpenFunction Gateway will be created automatically once you install OpenFunction:

apiVersion: networking.openfunction.io/v1alpha1

kind: Gateway

metadata:

name: openfunction

namespace: openfunction

spec:

domain: ofn.io

clusterDomain: cluster.local

hostTemplate: "{{.Name}}.{{.Namespace}}.{{.Domain}}"

pathTemplate: "{{.Namespace}}/{{.Name}}"

httpRouteLabelKey: "app.kubernetes.io/managed-by"

gatewayRef:

name: contour

namespace: projectcontour

gatewaySpec:

listeners:

- name: ofn-http-internal

hostname: "*.cluster.local"

protocol: HTTP

port: 80

allowedRoutes:

namespaces:

from: All

- name: ofn-http-external

hostname: "*.ofn.io"

protocol: HTTP

port: 80

allowedRoutes:

namespaces:

from: All

You can customize the default OpenFunction Gateway like below:

kubectl edit gateway openfunction -n openfunction

Switch to a different Kubernetes Gateway

You can switch to any gateway implementation that supports the Kubernetes Gateway API, such as Contour, Istio, Apache APISIX, Envoy Gateway (in the future) and more, in an easier and vendor-neutral way.

Here you can find more details.

Multiple OpenFunction Gateways

Multiple Gateways are meaningless for OpenFunction; currently only one OpenFunction Gateway is supported.

3.11.3 - Route

What is Route?

Route is part of the Function definition. Route defines how traffic from the Gateway listener is routed to a function.

A Route specifies the Gateway to which it attaches in gatewayRef, which allows it to receive traffic from the Gateway.

Once a sync Function is created, the function controller will:

- Look for the Gateway called openfunction in the openfunction namespace, then attach to this Gateway if route.gatewayRef is not defined in the function.

- Automatically generate route.hostnames based on Gateway.spec.hostTemplate, if route.hostnames is not defined in the function.

- Automatically generate route.rules based on Gateway.spec.pathTemplate or path of /, if route.rules is not defined in the function.

- Create an HTTPRoute custom resource based on Route. BackendRefs will be automatically linked to the corresponding Knative service revision, and the label HTTPRouteLabelKey will be added to this HTTPRoute.

- Create the service {{.Name}}.{{.Namespace}}.svc.cluster.local, which defines an entry for accessing the function from within the cluster.

- Update the HTTPRoute if the Gateway referenced by route.gatewayRef changes.

After a sync Function is deployed, you’ll be able to find Function addresses and Route status in Function’s status field, e.g:

status:

addresses:

- type: External

value: http://function-sample-serving-only.default.ofn.io/

- type: Internal

value: http://function-sample-serving-only.default.svc.cluster.local/

build:

resourceHash: "14903236521345556383"

state: Skipped

route:

conditions:

- message: Valid HTTPRoute

reason: Valid

status: "True"

type: Accepted

hosts:

- function-sample-serving-only.default.ofn.io

- function-sample-serving-only.default.svc.cluster.local

paths:

- type: PathPrefix

value: /

serving:

lastSuccessfulResourceRef: serving-znk54

resourceHash: "10715302888241374768"

resourceRef: serving-znk54

service: serving-znk54-ksvc-nbg6f

state: Running

Note

The Address of type Internal in Function.status provides the default method for accessing functions from within the cluster.

This internal address is not affected by the Gateway referenced by route.gatewayRef and it’s suitable for use as sink.url of EventSource.

The Address of type External in Function.status provides methods for accessing functions from outside the cluster (you can choose to configure Magic DNS or real DNS; please refer to access functions by the external address for more details).

This external address is generated based on route.gatewayRef, route.hostnames and route.rules. The routing mode only takes effect on this external address. The following documentation explains how it works.

For more information about how to access functions, please refer to Function Entrypoints.

Host Based Routing

Host-based routing is the default routing mode. When route.hostnames is not defined, route.hostnames will be generated based on gateway.spec.hostTemplate.

If route.rules is not defined, route.rules will be generated based on path of /.

kubectl apply -f - <<EOF

apiVersion: core.openfunction.io/v1beta2

kind: Function

metadata:

name: function-sample

spec:

version: "v1.0.0"

image: "openfunctiondev/v1beta1-http:latest"

serving:

template:

containers:

- name: function

imagePullPolicy: Always

triggers:

http:

route:

gatewayRef:

name: openfunction

namespace: openfunction

EOF

If you are using the default OpenFunction Gateway, the function external address will be as below:

http://function-sample.default.ofn.io/
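The placeholders in hostTemplate and pathTemplate look like Go template syntax, so the way an address such as the one above is derived can be sketched with Go’s text/template package. This is an assumption about the mechanism rather than a description of the controller’s code; the routeVars struct and the render helper are hypothetical names, and the values are taken from the default OpenFunction Gateway.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// routeVars holds the values referenced by the templates. The field names
// match the placeholders used in hostTemplate and pathTemplate.
type routeVars struct {
	Name, Namespace, Domain string
}

// render executes a template string against the given values.
func render(tmpl string, v routeVars) (string, error) {
	t, err := template.New("route").Parse(tmpl)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := t.Execute(&buf, v); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	v := routeVars{Name: "function-sample", Namespace: "default", Domain: "ofn.io"}

	// hostTemplate from the default OpenFunction Gateway.
	host, err := render("{{.Name}}.{{.Namespace}}.{{.Domain}}", v)
	if err != nil {
		panic(err)
	}
	// pathTemplate from the default OpenFunction Gateway.
	path, err := render("{{.Namespace}}/{{.Name}}", v)
	if err != nil {
		panic(err)
	}

	fmt.Println("host-based address: http://" + host + "/")
	fmt.Println("path from pathTemplate: /" + path)
}
```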

Path Based Routing

If you define route.hostnames in a function, route.rules will be generated based on gateway.spec.pathTemplate.

kubectl apply -f - <<EOF

apiVersion: core.openfunction.io/v1beta2

kind: Function

metadata:

name: function-sample

spec:

version: "v1.0.0"

image: "openfunctiondev/v1beta1-http:latest"

serving:

template:

containers:

- name: function

imagePullPolicy: Always

triggers:

http:

route:

gatewayRef:

name: openfunction

namespace: openfunction

hostnames:

- "sample.ofn.io"

EOF

If you are using the default OpenFunction Gateway, the function external address will be as below:

http://sample.default.ofn.io/default/function-sample/

Host and Path based routing

You can define hostname and path at the same time to customize how traffic should be routed to your function.

Note

In this mode, you’ll need to resolve possible conflicts between HTTPRoutes by yourself.

kubectl apply -f - <<EOF

apiVersion: core.openfunction.io/v1beta2

kind: Function

metadata:

name: function-sample

spec:

version: "v1.0.0"

image: "openfunctiondev/v1beta1-http:latest"

serving:

template:

containers:

- name: function

imagePullPolicy: Always

triggers:

http:

route:

gatewayRef:

name: openfunction

namespace: openfunction

rules:

- matches:

- path:

type: PathPrefix

value: /v2/foo

hostnames:

- "sample.ofn.io"

EOF

If you are using the default OpenFunction Gateway, the function external address will be as below:

http://sample.default.ofn.io/v2/foo/

3.11.4 - Function Entrypoints

There are several methods to access a sync function. Let’s elaborate on this in the following section.

This documentation assumes you are using the default OpenFunction Gateway and you have a sync function named function-sample.

Access functions from within the cluster

Access functions by the internal address

OpenFunction will create this service for every sync Function: {{.Name}}.{{.Namespace}}.svc.cluster.local. This service will be used to provide the Function internal address.

Get the Function internal address by running the following command:

export FUNC_INTERNAL_ADDRESS=$(kubectl get function function-sample -o=jsonpath='{.status.addresses[?(@.type=="Internal")].value}')

This address provides the default method for accessing functions within the cluster and is suitable for use as sink.url of EventSource.

Access the Function using curl in a pod:

kubectl run --rm ofn-test -i --tty --image=radial/busyboxplus:curl -- curl -sv $FUNC_INTERNAL_ADDRESS

Access functions from outside the cluster

Access functions by the Kubernetes Gateway’s IP address

Get Kubernetes Gateway’s ip address:

export IP=$(kubectl get node -l "! node.kubernetes.io/exclude-from-external-load-balancers" -o=jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')

Get Function’s HOST and PATH:

export FUNC_HOST=$(kubectl get function function-sample -o=jsonpath='{.status.route.hosts[0]}')

export FUNC_PATH=$(kubectl get function function-sample -o=jsonpath='{.status.route.paths[0].value}')

Access Function using curl directly:

curl -sv -HHOST:$FUNC_HOST http://$IP$FUNC_PATH
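The same request can be made from Go code. The sketch below shows the one detail that differs from curl: in Go’s net/http client the Host header is set through the request’s Host field rather than through the header map. The getWithHost helper is a hypothetical name, and a local test server that echoes the received Host stands in for the Kubernetes Gateway so the example runs without a cluster.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// getWithHost sends a GET to url while presenting host as the HTTP Host
// header, similar to `curl -H "Host: ..."`. In Go the Host header is set
// through req.Host rather than req.Header.
func getWithHost(url, host string) (string, error) {
	req, err := http.NewRequest(http.MethodGet, url, nil)
	if err != nil {
		return "", err
	}
	req.Host = host
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

// newEchoHostServer starts a local stand-in for the gateway that replies
// with the Host it received.
func newEchoHostServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, r.Host)
	}))
}

func main() {
	srv := newEchoHostServer()
	defer srv.Close()

	// In a real cluster, the URL would be http://$IP$FUNC_PATH and the
	// host would be $FUNC_HOST from the commands above.
	got, err := getWithHost(srv.URL+"/", "function-sample.default.ofn.io")
	if err != nil {
		panic(err)
	}
	fmt.Println("server saw Host:", got)
}
```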

Access functions by the external address

To access a sync function by the external address, you’ll need to configure DNS first. Either Magic DNS or real DNS works:

Magic DNS

Get Kubernetes Gateway’s ip address:

export IP=$(kubectl get node -l "! node.kubernetes.io/exclude-from-external-load-balancers" -o=jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')

Replace domain defined in OpenFunction Gateway with Magic DNS:

export DOMAIN="$IP.sslip.io"

kubectl patch gateway.networking.openfunction.io/openfunction -n openfunction --type merge --patch '{"spec": {"domain": "'$DOMAIN'"}}'

Then, you can see Function external address in Function’s status field:

kubectl get function function-sample -oyaml

status:

addresses:

- type: External

value: http://function-sample.default.172.31.73.53.sslip.io/

- type: Internal

value: http://function-sample.default.svc.cluster.local/

build:

resourceHash: "14903236521345556383"

state: Skipped

route:

conditions:

- message: Valid HTTPRoute

reason: Valid

status: "True"

type: Accepted

hosts:

- function-sample.default.172.31.73.53.sslip.io

- function-sample.default.svc.cluster.local

paths:

- type: PathPrefix

value: /

serving:

lastSuccessfulResourceRef: serving-t56fq

resourceHash: "2638289828407595605"

resourceRef: serving-t56fq

service: serving-t56fq-ksvc-bv8ng

state: Running

Real DNS

If you have an external IP address, you can configure a wildcard A record as your domain:

# Here example.com is the domain defined in OpenFunction Gateway

*.example.com == A <external-ip>

If you have a CNAME, you can configure a CNAME record as your domain:

# Here example.com is the domain defined in OpenFunction Gateway

*.example.com == CNAME <external-name>

Replace domain defined in OpenFunction Gateway with the domain you configured above:

export DOMAIN="example.com"

kubectl patch gateway.networking.openfunction.io/openfunction -n openfunction --type merge --patch '{"spec": {"domain": "'$DOMAIN'"}}'

Then, you can see Function external address in Function’s status field:

kubectl get function function-sample -oyaml

status:

addresses:

- type: External

value: http://function-sample.default.example.com/

- type: Internal

value: http://function-sample.default.svc.cluster.local/

build:

resourceHash: "14903236521345556383"

state: Skipped

route:

conditions:

- message: Valid HTTPRoute

reason: Valid

status: "True"

type: Accepted

hosts:

- function-sample.default.example.com

- function-sample.default.svc.cluster.local

paths:

- type: PathPrefix

value: /

serving:

lastSuccessfulResourceRef: serving-t56fq

resourceHash: "2638289828407595605"

resourceRef: serving-t56fq

service: serving-t56fq-ksvc-bv8ng

state: Running

Then, you can get the Function external address by running the following command:

export FUNC_EXTERNAL_ADDRESS=$(kubectl get function function-sample -o=jsonpath='{.status.addresses[?(@.type=="External")].value}')

Now, you can access Function using curl directly:

curl -sv $FUNC_EXTERNAL_ADDRESS

3.12 - CI/CD

Overview

Previously, users could use OpenFunction to build function or application source code into container images and then deploy the built image directly to the underlying sync/async Serverless runtime without user intervention.

However, OpenFunction could neither rebuild the image and redeploy it whenever the function or application source code changed, nor redeploy the image whenever the image itself changed (for example, when the image is built and pushed manually or by another function).

Starting from v1.0.0, OpenFunction adds the ability to detect source code or image changes and then rebuild and/or redeploy the newly built image through a new component called Revision Controller. The Revision Controller is able to:

- Detect source code changes in GitHub, GitLab, or Gitee, then rebuild and redeploy the newly built image whenever the source code changes.

- Detect changes to the bundle container image (the image containing the source code), then rebuild and redeploy the newly built image whenever the bundle image changes.

- Detect changes to the function or application image, then redeploy the new image whenever the function or application image changes.
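The three behaviors above can be read as a small decision table. The following Go sketch expresses it as a function that compares the last seen revision (a commit hash or image digest) with the current one and picks an action. The Action type and the decide helper are hypothetical and only illustrate the described behavior; the change type names source, source-image, and image are the values of the type parameter documented in the Parameters table.

```go
package main

import "fmt"

// Action describes what a (hypothetical) poll step decides to do.
type Action string

const (
	NoOp             Action = "none"
	RebuildAndDeploy Action = "rebuild+redeploy"
	RedeployOnly     Action = "redeploy"
)

// decide compares the last seen revision with the current one and picks
// an action based on the change type being watched.
func decide(changeType, lastSeen, current string) Action {
	if lastSeen == current {
		return NoOp // nothing changed since the last poll
	}
	switch changeType {
	case "source", "source-image":
		// Source code or bundle image changed: rebuild, then redeploy.
		return RebuildAndDeploy
	case "image":
		// The function image itself changed: redeploy only.
		return RedeployOnly
	default:
		return NoOp
	}
}

func main() {
	fmt.Println(decide("source", "abc123", "def456"))
	fmt.Println(decide("image", "sha256:aaa", "sha256:bbb"))
	fmt.Println(decide("source", "abc123", "abc123"))
}
```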

Quick start

Install Revision Controller

You can enable Revision Controller when installing OpenFunction by simply adding the following flag to the helm command.

--set revisionController.enable=true

You can also enable Revision Controller after OpenFunction is installed:

kubectl apply -f https://raw.githubusercontent.com/OpenFunction/revision-controller/release-1.0/deploy/bundle.yaml

The Revision Controller will be installed to the openfunction namespace by default. You can download bundle.yaml and change the namespace manually if you want to install it to another namespace.

Detect source code or image changes

To detect source code or image changes, you’ll need to add revision controller switch and params like below to a function’s annotation.

apiVersion: core.openfunction.io/v1beta2

kind: Function

metadata:

annotations:

openfunction.io/revision-controller: enable

openfunction.io/revision-controller-params: |

type: source

repo-type: github

polling-interval: 1m

name: function-http-java

namespace: default

spec:

build:

...

serving:

...

Annotations

| Key | Description |

|---|---|

| openfunction.io/revision-controller | Whether to enable revision controller to detect source code or image changes for this function, can be set to either enable or disable. |

| openfunction.io/revision-controller-params | Parameters for revision controller. |

Parameters

| Name | Description |

|---|---|

| type | The change type to detect including source, source-image, and image. |

| polling-interval | The interval at which to poll the image digest or source code head. |

| repo-type | The type of the git repo including github, gitlab, and gitee. Defaults to github. |

| base-url | The base url of the gitlab server. |

| auth-type | The auth type of the gitlab server. |

| project-id | The project id of a gitlab repo. |

| insecure-registry | If the image registry is insecure, you should set this to true. |
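To show how these parameters fit together, here is a minimal Go sketch that reads the key: value lines from the openfunction.io/revision-controller-params annotation. It is not a YAML parser and not the Revision Controller’s own code: the Params struct and parseParams helper are hypothetical, only three of the parameters are handled, and the sketch assumes that polling-interval uses Go duration syntax such as 1m.

```go
package main

import (
	"fmt"
	"strings"
	"time"
)

// Params is a hypothetical struct holding a few parameters from the table.
type Params struct {
	Type            string
	RepoType        string
	PollingInterval time.Duration
}

// parseParams reads simple "key: value" lines, as in the annotation example.
func parseParams(raw string) (Params, error) {
	p := Params{RepoType: "github"} // documented default for repo-type
	for _, line := range strings.Split(raw, "\n") {
		line = strings.TrimSpace(line)
		if line == "" {
			continue
		}
		// Split on the first colon only, so values such as URLs stay intact.
		parts := strings.SplitN(line, ":", 2)
		if len(parts) != 2 {
			return p, fmt.Errorf("malformed line %q", line)
		}
		key, val := strings.TrimSpace(parts[0]), strings.TrimSpace(parts[1])
		switch key {
		case "type":
			p.Type = val
		case "repo-type":
			p.RepoType = val
		case "polling-interval":
			// Assumption: the interval is written in Go duration syntax.
			d, err := time.ParseDuration(val)
			if err != nil {
				return p, fmt.Errorf("polling-interval: %w", err)
			}
			p.PollingInterval = d
		}
	}
	return p, nil
}

func main() {
	p, err := parseParams("type: source\nrepo-type: github\npolling-interval: 1m")
	if err != nil {
		panic(err)
	}
	fmt.Println(p.Type, p.RepoType, p.PollingInterval)
}
```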

3.13 - OpenFunction Events

3.13.1 - Introduction

Overview

OpenFunction Events is OpenFunction’s event management framework. It provides the following core features:

- Support for triggering target functions by synchronous and asynchronous calls

- User-defined trigger judgment logic

- The components of OpenFunction Events can be driven by OpenFunction itself

Architecture

The following diagram illustrates the architecture of OpenFunction Events.

Concepts

EventSource

EventSource defines the producer of an event, such as a Kafka service, an object storage service, and even a function. It contains descriptions of these event producers and information about where to send these events.

EventSource supports the following types of event source servers:

- Kafka

- Cron (scheduler)

- Redis

EventBus (ClusterEventBus)

EventBus is responsible for aggregating events and making them persistent. It contains descriptions of an event bus broker that usually is a message queue (such as NATS Streaming and Kafka), and provides these configurations for EventSource and Trigger.

EventBus handles event bus adaptation for namespace scope by default. For cluster scope, ClusterEventBus is available as an event bus adapter and takes effect when other components cannot find an EventBus under a namespace.

EventBus supports the following event bus broker:

- NATS Streaming

Trigger

Trigger is an abstraction of the purpose of an event, such as what needs to be done when a message is received. It contains the purpose of an event defined by you, which tells the trigger which EventSource it should fetch the event from and subsequently determine whether to trigger the target function according to the given conditions.

Reference

For more information, see EventSource Specifications and EventBus Specifications.

3.13.2 - Use EventSource

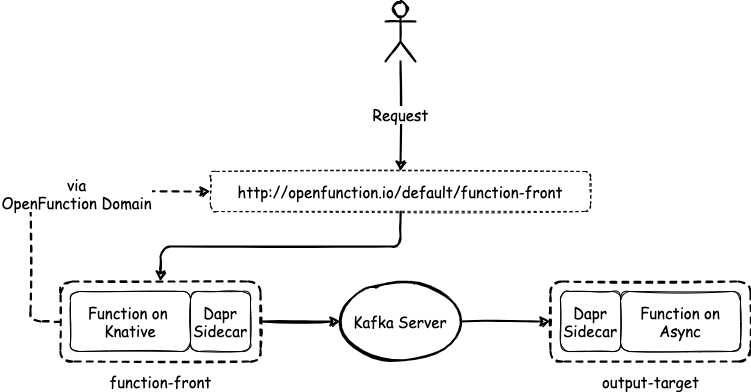

This document gives an example of how to use an event source to trigger a synchronous function.

In this example, an EventSource is defined for synchronous invocation to use the event source (a Kafka server) as an input binding of a function (a Knative service). When the event source generates an event, it invokes the function and gets a synchronous return through the spec.sink configuration.

Create a Function

Use the following content to create a function as the EventSource Sink. For more information about how to create a function, see Create sync functions.

    apiVersion: core.openfunction.io/v1beta2
    kind: Function
    metadata:
      name: sink
    spec:
      version: "v1.0.0"
      image: "openfunction/sink-sample:latest"
      serving:
        template:
          containers:
            - name: function
              imagePullPolicy: Always
        triggers:
          http:
            port: 8080

After the function is created, run the following command to get the URL of the function.

    $ kubectl get functions.core.openfunction.io
    NAME   BUILDSTATE   SERVINGSTATE   BUILDER   SERVING         URL                                    AGE
    sink   Skipped      Running                  serving-4x5wh   https://openfunction.io/default/sink   13s

Note

In the URL of the function, openfunction is the name of the Kubernetes Service and io is the namespace where the Kubernetes Service runs. For more information, see Namespaces of Services.
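The note above maps the host part of the function URL to a Kubernetes Service name and namespace. As a minimal sketch of that mapping, the hypothetical helper `sink_uri` below (not part of OpenFunction) assembles the in-cluster address of the function. It assumes the default `cluster.local` cluster domain and that the URL path is `<function namespace>/<function name>`. With the values from this example it produces the address that this section later uses as spec.sink.uri of the EventSource.

```shell
#!/bin/sh
# Hypothetical helper: build the in-cluster address of a function.
# Assumes the default cluster domain "cluster.local".
# Usage: sink_uri <service> <service-namespace> <function-namespace> <function-name>
sink_uri() {
  printf 'http://%s.%s.svc.cluster.local/%s/%s\n' "$1" "$2" "$3" "$4"
}

# Values taken from the URL https://openfunction.io/default/sink
sink_uri openfunction io default sink
# prints http://openfunction.io.svc.cluster.local/default/sink
```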

Create a Kafka Cluster

Run the following commands to install strimzi-kafka-operator in the default namespace.

    helm repo add strimzi https://strimzi.io/charts/
    helm install kafka-operator -n default strimzi/strimzi-kafka-operator

Use the following content to create a file kafka.yaml.

    apiVersion: kafka.strimzi.io/v1beta2
    kind: Kafka
    metadata:
      name: kafka-server
      namespace: default
    spec:
      kafka:
        version: 3.3.1
        replicas: 1
        listeners:
          - name: plain
            port: 9092
            type: internal
            tls: false
          - name: tls
            port: 9093
            type: internal
            tls: true
        config:
          offsets.topic.replication.factor: 1
          transaction.state.log.replication.factor: 1
          transaction.state.log.min.isr: 1
          default.replication.factor: 1
          min.insync.replicas: 1
          inter.broker.protocol.version: "3.1"
        storage:
          type: ephemeral
      zookeeper:
        replicas: 1
        storage:
          type: ephemeral
      entityOperator:
        topicOperator: {}
        userOperator: {}
    ---
    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaTopic
    metadata:
      name: events-sample
      namespace: default
      labels:
        strimzi.io/cluster: kafka-server
    spec:
      partitions: 10
      replicas: 1
      config:
        retention.ms: 7200000
        segment.bytes: 1073741824

Run the following command to deploy a 1-replica Kafka server named kafka-server and a 1-replica Kafka topic named events-sample in the default namespace. The Kafka and Zookeeper clusters created by this command have a storage type of ephemeral and are demonstrated using emptyDir.

    kubectl apply -f kafka.yaml

Run the following command to check pod status and wait for Kafka and Zookeeper to be up and running.

    $ kubectl get po
    NAME                                            READY   STATUS    RESTARTS   AGE
    kafka-server-entity-operator-568957ff84-nmtlw   3/3     Running   0          8m42s
    kafka-server-kafka-0                            1/1     Running   0          9m13s
    kafka-server-zookeeper-0                        1/1     Running   0          9m46s
    strimzi-cluster-operator-687fdd6f77-cwmgm       1/1     Running   0          11m

Run the following command to view the metadata of the Kafka cluster.

    kafkacat -L -b kafka-server-kafka-brokers:9092
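The step that waits for Kafka and Zookeeper to come up can be scripted instead of checked by eye. The following is a minimal sketch, assuming the default `kubectl get po` column layout (NAME, READY, STATUS, and so on). The helper `all_pods_ready` is hypothetical and not part of kubectl or OpenFunction. It treats any pod that is not Running with all containers ready as not ready, so pods in a Completed state would also count as not ready.

```shell
#!/bin/sh
# Hypothetical helper (not part of kubectl or OpenFunction).
# Reads `kubectl get po` output on stdin and succeeds only if at least one pod
# is listed and every pod is Running with all of its containers ready.
all_pods_ready() {
  awk 'NR > 1 {
         n++
         split($2, ready, "/")   # READY column, for example 3/3
         if (ready[1] != ready[2] || $3 != "Running") bad = 1
       }
       END { exit (bad || n == 0) }'
}

# Demo with sample output copied from this section.
all_pods_ready <<'EOF' && echo "all pods ready"
NAME                       READY   STATUS    RESTARTS   AGE
kafka-server-kafka-0       1/1     Running   0          9m13s
kafka-server-zookeeper-0   1/1     Running   0          9m46s
EOF
```

Against a live cluster, the helper could be used in a polling loop such as `until kubectl get po | all_pods_ready; do sleep 5; done`.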

Trigger a Synchronous Function

Create an EventSource

Use the following content to create an EventSource configuration file (for example, eventsource-sink.yaml).

Note

- The following example defines an event source named my-eventsource and marks the events generated by the specified Kafka server as sample-one events.

- spec.sink references the target function (Knative service) created in the prerequisites.

    apiVersion: events.openfunction.io/v1alpha1
    kind: EventSource
    metadata:
      name: my-eventsource
    spec:
      logLevel: "2"
      kafka:
        sample-one:
          brokers: "kafka-server-kafka-brokers.default.svc.cluster.local:9092"
          topic: "events-sample"
          authRequired: false
      sink:
        uri: "http://openfunction.io.svc.cluster.local/default/sink"

Run the following command to apply the configuration file.

    kubectl apply -f eventsource-sink.yaml

Run the following commands to check the results.

    $ kubectl get eventsources.events.openfunction.io
    NAME             EVENTBUS   SINK   STATUS
    my-eventsource                     Ready

    $ kubectl get components
    NAME                                                      AGE
    serving-8f6md-component-esc-kafka-sample-one-r527t        68m
    serving-8f6md-component-ts-my-eventsource-default-wz8jt   68m

    $ kubectl get deployments.apps
    NAME                                  READY   UP-TO-DATE   AVAILABLE   AGE
    serving-8f6md-deployment-v100-pg9sd   1/1     1            1           68m

Note

In this example of triggering a synchronous function, the workflow of the EventSource controller is described as follows:

- Create an EventSource custom resource named my-eventsource.

- Create a Dapr component named serving-xxxxx-component-esc-kafka-sample-one-xxxxx to enable the EventSource to associate with the event source.

- Create a Dapr component named serving-xxxxx-component-ts-my-eventsource-default-xxxxx to enable the EventSource to associate with the sink function.

- Create a Deployment named serving-xxxxx-deployment-v100-xxxxx-xxxxxxxxxx-xxxxx for processing events.

Create an event producer

To start the target function, you need to create some events to trigger the function.

Use the following content to create an event producer configuration file (for example, events-producer.yaml).

    apiVersion: core.openfunction.io/v1beta1
    kind: Function
    metadata:
      name: events-producer
    spec:
      version: "v1.0.0"
      image: openfunctiondev/v1beta1-bindings:latest
      serving:
        template:
          containers:
            - name: function
              imagePullPolicy: Always
        runtime: "async"
        inputs:
          - name: cron
            component: cron
        outputs:
          - name: target
            component: kafka-server
            operation: "create"
        bindings:
          cron:
            type: bindings.cron
            version: v1
            metadata:
              - name: schedule
                value: "@every 2s"
          kafka-server:
            type: bindings.kafka
            version: v1
            metadata:
              - name: brokers
                value: "kafka-server-kafka-brokers:9092"
              - name: topics
                value: "events-sample"
              - name: consumerGroup
                value: "bindings-with-output"
              - name: publishTopic
                value: "events-sample"
              - name: authRequired
                value: "false"

Run the following command to apply the configuration file.

    kubectl apply -f events-producer.yaml

Run the following command to check the results in real time.

    $ kubectl get po --watch
    NAME                                                        READY   STATUS              RESTARTS   AGE
    serving-k6zw8-deployment-v100-fbtdc-dc96c4589-s25dh         0/2     ContainerCreating   0          1s
    serving-8f6md-deployment-v100-pg9sd-6666c5577f-4rpdg        2/2     Running             0          23m
    serving-k6zw8-deployment-v100-fbtdc-dc96c4589-s25dh         0/2     ContainerCreating   0          1s
    serving-k6zw8-deployment-v100-fbtdc-dc96c4589-s25dh         1/2     Running             0          5s
    serving-k6zw8-deployment-v100-fbtdc-dc96c4589-s25dh         2/2     Running             0          8s
    serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   0/2     Pending             0          0s
    serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   0/2     Pending             0          0s
    serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   0/2     ContainerCreating   0          0s
    serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   0/2     ContainerCreating   0          2s
    serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   1/2     Running             0          4s
    serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   1/2     Running             0          4s
    serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   2/2     Running             0          4s

3.13.3 - Use EventBus and Trigger

This document gives an example of how to use EventBus and Trigger.

Prerequisites

- You need to create a function as the target function to be triggered. Please refer to Create a function for more details.

- You need to create a Kafka cluster. Please refer to Create a Kafka cluster for more details.

Deploy a NATS streaming server

Run the following commands to deploy a NATS streaming server. This document uses nats://nats.default:4222 as the access address of the NATS streaming server and stan as the cluster ID. For more information, see NATS Streaming (STAN).

helm repo add nats https://nats-io.github.io/k8s/helm/charts/

helm install nats nats/nats

helm install stan nats/stan --set stan.nats.url=nats://nats:4222

Create an OpenFuncAsync Runtime Function

Use the following content to create a configuration file (for example, openfuncasync-function.yaml) for the target function, which is triggered by the Trigger CRD and prints the received message.

    apiVersion: core.openfunction.io/v1beta2
    kind: Function
    metadata:
      name: trigger-target
    spec:
      version: "v1.0.0"
      image: openfunctiondev/v1beta1-trigger-target:latest
      serving:
        scaleOptions:
          keda:
            scaledObject:
              pollingInterval: 15
              minReplicaCount: 0
              maxReplicaCount: 10
              cooldownPeriod: 30
            triggers:
              - type: stan
                metadata:
                  natsServerMonitoringEndpoint: "stan.default.svc.cluster.local:8222"
                  queueGroup: "grp1"
                  durableName: "ImDurable"
                  subject: "metrics"
                  lagThreshold: "10"
        triggers:
          dapr:
            - name: eventbus
              topic: metrics
        pubsub:
          eventbus:
            type: pubsub.natsstreaming
            version: v1
            metadata:
              - name: natsURL
                value: "nats://nats.default:4222"
              - name: natsStreamingClusterID
                value: "stan"
              - name: subscriptionType
                value: "queue"
              - name: durableSubscriptionName
                value: "ImDurable"
              - name: consumerID
                value: "grp1"

Run the following command to apply the configuration file.

    kubectl apply -f openfuncasync-function.yaml
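The scaleOptions of the target function let KEDA scale it between 0 and 10 replicas according to the message backlog on the metrics subject. The following is a rough sketch only, not KEDA's implementation. It assumes that lagThreshold acts as an average target per replica, as in the usual Kubernetes HPA average-value calculation, and it ignores the timing effects of pollingInterval and cooldownPeriod. The helper `desired_replicas` is hypothetical and estimates the replica count for a given number of pending messages.

```shell
#!/bin/sh
# Hypothetical helper: estimate replicas as ceil(lag / threshold),
# clamped to [min, max]. This is an approximation, not KEDA's implementation.
# Usage: desired_replicas <pending-messages> <lagThreshold> <min> <max>
desired_replicas() {
  lag=$1 threshold=$2 min=$3 max=$4
  n=$(( (lag + threshold - 1) / threshold ))   # integer ceiling division
  if [ "$n" -lt "$min" ]; then n=$min; fi
  if [ "$n" -gt "$max" ]; then n=$max; fi
  echo "$n"
}

# Values from the trigger-target function: lagThreshold 10, 0 to 10 replicas.
desired_replicas 35 10 0 10     # prints 4
desired_replicas 1000 10 0 10   # prints 10 (capped at maxReplicaCount)
```

Under these assumptions, an empty backlog maps to 0 replicas, which corresponds to minReplicaCount: 0 in the configuration.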

Create an EventBus and an EventSource

Use the following content to create a configuration file (for example,