
Concepts

- 1: Function Definition

- 2: Function Build

- 3: Build Strategy

- 4: Function Trigger

- 5: Function Outputs

- 6: Function Scaling

- 7: Function Signatures

- 8: Wasm Functions

- 9: Serverless Applications

- 10: BaaS Integration

- 11: Networking

- 11.1: Introduction

- 11.2: OpenFunction Gateway

- 11.3: Route

- 11.4: Function Entrypoints

- 12: CI/CD

- 13: OpenFunction Events

- 13.1: Introduction

- 13.2: Use EventSource

- 13.3: Use EventBus and Trigger

- 13.4: Use Multiple Sources in One EventSource

- 13.5: Use ClusterEventBus

- 13.6: Use Trigger Conditions

1 - Function Definition

Function

Function is the control plane of Build and Serving, and it is also the interface through which users work with OpenFunction. Users don’t need to create Build or Serving resources separately because Function is the only place to define a function’s Build and Serving.

Once a function is created, it controls the lifecycle of Build and Serving without user intervention:

- If Build is defined in a function, a builder custom resource is created to build the function’s container image once the function is deployed.

- If Serving is defined in a function, a serving custom resource is created to control the function’s serving and autoscaling.

- Build and Serving can be defined together, which means the function image is built first and then used in serving.

- Build can be defined without Serving. In this case, the function is only used to build an image.

- Serving can be defined without Build. In this case, the function uses a previously built function image for serving.
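The combinations of Build and Serving described above can be summarized in a small Python sketch. This only illustrates the documented rules and is not the controller's code; the function name `plan_function` and the phase labels are hypothetical.

```python
def plan_function(spec):
    """Return the phases a Function goes through, based on its spec sections.

    Illustrative only: `spec` is a plain dict standing in for the Function
    spec, and the returned labels are made up for this example.
    """
    has_build = "build" in spec
    has_serving = "serving" in spec
    if has_build and has_serving:
        # The image is built first, then the built image is served.
        return ["build image", "serve built image"]
    if has_build:
        # Build only: the function just produces an image.
        return ["build image"]
    if has_serving:
        # Serving only: a previously built image is used.
        return ["serve existing image"]
    return []


print(plan_function({"build": {}, "serving": {}}))
```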

Build

OpenFunction uses Shipwright and Cloud Native Buildpacks to build the function source code into container images.

Once a function is created with a Build spec in it, a builder custom resource is created, which uses Shipwright to manage the build tools and strategy. Shipwright then uses Tekton to control the process of building container images, including fetching source code, generating image artifacts, and publishing images.

Serving

Once a function is created with Serving spec, a Serving custom resource will be created to control a function’s serving phase. Currently OpenFunction Serving supports two runtimes: the Knative sync runtime and the OpenFunction async runtime.

The sync runtime

For sync functions, OpenFunction currently supports Knative Serving as the runtime. We’re also planning to add another sync function runtime powered by the KEDA http-addon.

The async runtime

OpenFunction’s async runtime is an event-driven runtime built on KEDA and Dapr. Async functions can be triggered by various event types such as message queues, cron jobs, and MQTT.

Reference

For more information, see Function Specifications.

2 - Function Build

Currently, OpenFunction supports building function images using Cloud Native Buildpacks without the need to create a Dockerfile.

You can also use OpenFunction to build Serverless Applications with a Dockerfile.

Build functions by defining a build section

You can build your functions or applications from the source code in a git repo or from the source code stored locally.

Build functions from source code in a git repo

You can build a function image by simply adding a build section in the Function definition like below.

If there is a serving section defined as well, the function will be launched as soon as the build completes.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: logs-async-handler
spec:
  version: "v2.0.0"
  image: openfunctiondev/logs-async-handler:v1
  imageCredentials:
    name: push-secret
  build:
    builder: openfunction/builder-go:latest
    env:
      FUNC_NAME: "LogsHandler"
      FUNC_CLEAR_SOURCE: "true"
      ## Customize the functions framework version (currently valid for functions-framework-go only).
      ## Usually you don't need to do so because the builder ships with the latest functions-framework.
      # FUNC_FRAMEWORK_VERSION: "v0.4.0"
      ## Use FUNC_GOPROXY to set the goproxy
      # FUNC_GOPROXY: "https://goproxy.cn"
    srcRepo:
      url: "https://github.com/OpenFunction/samples.git"
      sourceSubPath: "functions/async/logs-handler-function/"
      revision: "main"

To push the function image to a container registry, you have to create a secret containing the registry’s credentials and add the secret to imageCredentials. You can refer to the prerequisites for more info.

Build functions from local source code

To build functions or applications from local source code, you’ll need to package your local source code into a container image and push this image to a container registry.

Suppose your source code is in the samples directory. You can use the following Dockerfile to build a source code bundle image.

FROM scratch

WORKDIR /

COPY samples samples/

Then you can build the source code bundle image like this:

docker build -t <your registry name>/sample-source-code:latest -f </path/to/the/dockerfile> .

docker push <your registry name>/sample-source-code:latest

It’s recommended to use the empty image scratch as the base image to build the source code bundle image. A non-empty base image may cause the source code copy to fail.

Unlike defining the spec.build.srcRepo.url field for the git repo method, you’ll need to define the spec.build.srcRepo.bundleContainer.image field instead.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: logs-async-handler
spec:
  build:
    srcRepo:
      bundleContainer:
        image: openfunctiondev/sample-source-code:latest
      sourceSubPath: "/samples/functions/async/logs-handler-function/"

The sourceSubPath is the absolute path of the source code in the source code bundle image.

Build functions with the pack CLI

It’s often necessary to build function images directly from local source code, especially for debugging or in offline environments. You can use the pack CLI for this.

Pack is a tool maintained by the Cloud Native Buildpacks project to support the use of buildpacks. It enables the following functionality:

- Build an application using buildpacks.

- Rebase application images created using buildpacks.

- Create various components used within the ecosystem.

Follow the instructions here to install the pack CLI tool.

You can find more details on how to use the pack CLI here.

To build OpenFunction function images from source code locally, you can follow the steps below:

Download function samples

git clone https://github.com/OpenFunction/samples.git

cd samples/functions/knative/hello-world-go

Build the function image with the pack CLI

pack build func-helloworld-go --builder openfunction/builder-go:v2.4.0-1.17 --env FUNC_NAME="HelloWorld" --env FUNC_CLEAR_SOURCE=true

Launch the function image locally

docker run --rm --env="FUNC_CONTEXT={\"name\":\"HelloWorld\",\"version\":\"v1.0.0\",\"port\":\"8080\",\"runtime\":\"Knative\"}" --env="CONTEXT_MODE=self-host" --name func-helloworld-go -p 8080:8080 func-helloworld-go

Visit the function

curl http://localhost:8080

Output example:

hello, world!
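The FUNC_CONTEXT value passed to docker run above is a JSON document that has to be escaped by hand on the command line. As a convenience, the following Python sketch generates the same JSON; the helper name `func_context` is hypothetical, and the fields are only those that appear in the command above.

```python
import json


def func_context(name, version="v1.0.0", port="8080", runtime="Knative"):
    """Build the FUNC_CONTEXT JSON string used in the docker run example.

    Only the four fields shown in this document are included; the defaults
    mirror the values from the command above.
    """
    context = {"name": name, "version": version, "port": port, "runtime": runtime}
    # Compact separators reproduce the whitespace-free form used above.
    return json.dumps(context, separators=(",", ":"))


print(func_context("HelloWorld"))
```

The printed string can then be passed as the value of the FUNC_CONTEXT environment variable, for example through an env file, instead of escaping the quotes manually.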

OpenFunction Builders

To build a function image with Cloud Native Buildpacks, a builder image is needed.

Here you can find builders for popular languages maintained by the OpenFunction community:

| Language | Builders |
|---|---|
| Go | openfunction/builder-go:v2.4.0 (openfunction/builder-go:latest) |
| Nodejs | openfunction/builder-node:v2-16.15 (openfunction/builder-node:latest) |
| Java | openfunction/builder-java:v2-11, openfunction/builder-java:v2-16, openfunction/builder-java:v2-17, openfunction/builder-java:v2-18 |
| Python | openfunction/gcp-builder:v1 |
| DotNet | openfunction/gcp-builder:v1 |

3 - Build Strategy

Build Strategy is used to control the build process. There are two types of strategies, ClusterBuildStrategy and BuildStrategy.

Both strategies define a group of steps necessary to control the application build process.

ClusterBuildStrategy is cluster-wide, while BuildStrategy is namespaced.

There are 4 built-in ClusterBuildStrategy resources in OpenFunction. You can find more details in the following sections.

openfunction

The openfunction ClusterBuildStrategy uses Buildpacks to build function images. It is the default build strategy.

The following are the parameters of the openfunction ClusterBuildStrategy:

| Name | Type | Description |
|---|---|---|
| RUN_IMAGE | string | Reference to a run image to use. |
| CACHE_IMAGE | string | Cache Image is a way to preserve cache layers across different builds, which can improve build performance when building functions or applications with lots of dependencies like Java functions. |
| BASH_IMAGE | string | The bash image that the strategy uses. |
| ENV_VARS | string | Environment variables to set during build-time. The format is key1=value1,key2=value2. |

Users can set these parameters like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: logs-async-handler
spec:
  build:
    shipwright:
      params:
        RUN_IMAGE: ""
        ENV_VARS: ""
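The ENV_VARS parameter listed in the table above expects a single string in the form key1=value1,key2=value2. The following Python sketch builds and parses such a string. The helper names are hypothetical, and since this document does not say how commas inside values are handled, the sketch simply rejects them.

```python
def format_env_vars(env):
    """Join a dict into the key1=value1,key2=value2 form used by ENV_VARS."""
    parts = []
    for key, value in env.items():
        # Assumption: no escaping rules are documented, so reject ambiguous input.
        if not key or "=" in key or "," in key or "," in value:
            raise ValueError(f"cannot encode {key!r}={value!r}")
        parts.append(f"{key}={value}")
    return ",".join(parts)


def parse_env_vars(text):
    """Split a key1=value1,key2=value2 string back into a dict."""
    result = {}
    if not text:
        return result
    for pair in text.split(","):
        # Split on the first "=" only, so values may themselves contain "=".
        key, sep, value = pair.partition("=")
        if not sep or not key:
            raise ValueError(f"malformed pair: {pair!r}")
        result[key] = value
    return result


print(format_env_vars({"GOFLAGS": "-mod=vendor", "CGO_ENABLED": "0"}))
```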

buildah

The buildah ClusterBuildStrategy uses buildah to build application images.

To use buildah ClusterBuildStrategy, you can define a Function like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: sample-go-app
  namespace: default
spec:
  build:
    builder: openfunction/buildah:v1.23.1
    dockerfile: Dockerfile
    shipwright:
      strategy:
        kind: ClusterBuildStrategy
        name: buildah

The following are the parameters of the buildah ClusterBuildStrategy:

| Name | Type | Description | Default |
|---|---|---|---|
| registry-search | string | The registries for searching short name images such as golang:latest, separated by commas. | docker.io,quay.io |
| registry-insecure | string | The fully-qualified name of insecure registries. An insecure registry is a registry that does not have a valid SSL certificate or only supports HTTP. | |
| registry-block | string | The registries that need to block pull access. | "" |

kaniko

The kaniko ClusterBuildStrategy uses kaniko to build application images.

To use kaniko ClusterBuildStrategy, you can define a Function like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-kaniko
  namespace: default
spec:
  build:
    builder: openfunction/kaniko-executor:v1.7.0
    dockerfile: Dockerfile
    shipwright:
      strategy:
        kind: ClusterBuildStrategy
        name: kaniko

ko

The ko ClusterBuildStrategy uses ko to build Go application images.

To use ko ClusterBuildStrategy, you can define a Function like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-ko
  namespace: default
spec:
  build:
    builder: golang:1.17
    dockerfile: Dockerfile
    shipwright:
      strategy:
        kind: ClusterBuildStrategy
        name: ko

The following are the parameters of ko ClusterBuildStrategy:

| Name | Type | Description | Default |
|---|---|---|---|
| go-flags | string | Value for the GOFLAGS environment variable. | "" |
| ko-version | string | Version of ko, must be either 'latest' or a release name from https://github.com/google/ko/releases. | "" |
| package-directory | string | The directory inside the context directory containing the main package. | "." |

Custom Strategy

Users can customize their own strategy. To customize strategy, you can refer to this.

4 - Function Trigger

Function Triggers define how a function is triggered. Currently, there are two kinds of triggers: HTTP Trigger and Dapr Trigger. The default trigger is the HTTP Trigger.

HTTP Trigger

HTTP Trigger triggers a function with an HTTP request. You can define an HTTP Trigger for a function like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  serving:
    triggers:
      http:
        port: 8080
        route:
          rules:
            - matches:
                - path:
                    type: PathPrefix
                    value: /echo

Dapr Trigger

Dapr Trigger triggers a function with events from Dapr bindings or Dapr pubsub. You can define a function with Dapr Trigger like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: logs-async-handler
  namespace: default
spec:
  serving:
    bindings:
      kafka-receiver:
        metadata:
          - name: brokers
            value: kafka-server-kafka-brokers:9092
          - name: authRequired
            value: "false"
          - name: publishTopic
            value: logs
          - name: topics
            value: logs
          - name: consumerGroup
            value: logs-handler
        type: bindings.kafka
        version: v1
    triggers:
      dapr:
        - name: kafka-receiver
          type: bindings.kafka

Function Inputs

Input is where a function can get extra input data from. Currently, Dapr State Stores are supported as Input.

You can define function input like this:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: logs-async-handler
  namespace: default
spec:
  serving:
    triggers:
      inputs:
        - dapr:
            name: mysql
            type: state.mysql

5 - Function Outputs

Function Outputs

Output is a component that the function can send data to, including:

- Any Dapr Output Binding components of the Dapr Bindings Building Block

- Any Dapr Pub/sub brokers components of the Dapr Pub/sub Building Block

For example, here you can find an async function with a cron input binding and a Kafka output binding:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: cron-input-kafka-output
spec:
  ...
  serving:
    ...
    outputs:
      - dapr:
          name: kafka-server
          type: bindings.kafka
          operation: "create"
    bindings:
      kafka-server:
        type: bindings.kafka
        version: v1
        metadata:
          - name: brokers
            value: "kafka-server-kafka-brokers:9092"
          - name: topics
            value: "sample-topic"
          - name: consumerGroup
            value: "bindings-with-output"
          - name: publishTopic
            value: "sample-topic"
          - name: authRequired
            value: "false"

Here is another async function example that uses a Kafka Pub/sub component as input.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: autoscaling-subscriber
spec:
  ...
  serving:
    ...
    runtime: "async"
    outputs:
      - dapr:
          name: kafka-server
          type: pubsub.kafka
          topic: "sample-topic"
    pubsub:
      kafka-server:
        type: pubsub.kafka
        version: v1
        metadata:
          - name: brokers
            value: "kafka-server-kafka-brokers:9092"
          - name: authRequired
            value: "false"
          - name: allowedTopics
            value: "sample-topic"
          - name: consumerID
            value: "autoscaling-subscriber"

6 - Function Scaling

Scaling is one of the core features of a FaaS or Serverless platform.

OpenFunction defines function scaling in ScaleOptions and defines triggers to activate function scaling in Triggers.

ScaleOptions

You can define unified scale options like below, which are valid for both sync and async functions:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  serving:
    scaleOptions:
      minReplicas: 0
      maxReplicas: 10

Usually, simply defining minReplicas and maxReplicas is not enough for async functions. You can define separate scale options for async functions like below, which will override the minReplicas and maxReplicas.

You can find more details of async function scale options in KEDA ScaledObject Spec and KEDA ScaledJob Spec.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  serving:
    scaleOptions:
      minReplicas: 0
      maxReplicas: 10
      keda:
        scaledObject:
          pollingInterval: 15
          cooldownPeriod: 60
          advanced:
            horizontalPodAutoscalerConfig:
              behavior:
                scaleDown:
                  stabilizationWindowSeconds: 45
                  policies:
                    - type: Percent
                      value: 50
                      periodSeconds: 15
                scaleUp:
                  stabilizationWindowSeconds: 0

You can also set advanced scale options for Knative sync functions, which will override the minReplicas and maxReplicas.

You can find more details of the Knative sync function scale options here.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  serving:
    scaleOptions:
      knative:
        autoscaling.knative.dev/initial-scale: "1"
        autoscaling.knative.dev/scale-down-delay: "0"
        autoscaling.knative.dev/window: "60s"
        autoscaling.knative.dev/panic-window-percentage: "10.0"
        autoscaling.knative.dev/metric: "concurrency"
        autoscaling.knative.dev/target: "100"

Triggers

Triggers define how to activate function scaling for async functions. You can use triggers defined in all KEDA scalers as OpenFunction’s trigger spec.

Sync functions’ scaling is activated by various options of HTTP requests, which are already defined in the previous ScaleOptions section.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  serving:
    scaleOptions:
      keda:
        triggers:
          - type: kafka
            metadata:
              topic: logs
              bootstrapServers: kafka-server-kafka-brokers.default.svc.cluster.local:9092
              consumerGroup: logs-handler
              lagThreshold: "20"
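To build intuition for how a Kafka trigger with a lagThreshold interacts with minReplicas and maxReplicas, here is a rough Python sketch. It assumes the usual average-value scaling rule (replicas grow with total lag divided by the threshold) and clamps the result to the configured bounds. It is a simplification, not KEDA's implementation: the real scaler may apply further rules, such as limits based on the number of topic partitions. The function name `estimate_replicas` is hypothetical.

```python
import math


def estimate_replicas(total_lag, lag_threshold, min_replicas, max_replicas):
    """Approximate the replica count for a given consumer lag.

    Simplified model: ceil(total_lag / lag_threshold), clamped to
    [min_replicas, max_replicas]. Real autoscaling behavior is more involved.
    """
    if lag_threshold <= 0:
        raise ValueError("lag_threshold must be positive")
    wanted = math.ceil(total_lag / lag_threshold)
    return max(min_replicas, min(max_replicas, wanted))


# With lagThreshold "20", minReplicas 0 and maxReplicas 10:
for lag in (0, 45, 1000):
    print(lag, estimate_replicas(lag, 20, 0, 10))
```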

7 - Function Signatures

Comparison of different function signatures

There are three function signatures in OpenFunction: HTTP, CloudEvent, and OpenFunction. Let’s explain this in more detail using Go functions as an example.

HTTP and CloudEvent signatures can be used to create sync functions, while the OpenFunction signature can be used to create both sync and async functions.

Furthermore, the OpenFunction signature can utilize various Dapr building blocks, including Bindings and Pub/sub, to access various BaaS services, which helps to create more powerful functions. (Dapr State management and Configuration will be supported soon.)

| | HTTP | CloudEvent | OpenFunction |
|---|---|---|---|
| Signature | func(http.ResponseWriter, *http.Request) error | func(context.Context, cloudevents.Event) error | func(ofctx.Context, []byte) (ofctx.Out, error) |
| Sync Functions | Supported | Supported | Supported |
| Async Functions | Not supported | Not supported | Supported |
| Dapr Binding | Not supported | Not supported | Supported |
| Dapr Pub/sub | Not supported | Not supported | Supported |

Samples

As you can see, OpenFunction signature is the recommended function signature, and we’re working on supporting this function signature in more language runtimes.

8 - Wasm Functions

WasmEdge is a lightweight, high-performance, and extensible WebAssembly runtime for cloud native, edge, and decentralized applications. It powers serverless apps, embedded functions, microservices, smart contracts, and IoT devices.

OpenFunction now supports building and running wasm functions with WasmEdge as the workload runtime.

You can find the WasmEdge Integration proposal here.

Wasm container images

The wasm image containing the wasm binary is a special container image without the OS layer. A special annotation, module.wasm.image/variant: compat-smart, should be added to this wasm container image so that a wasm runtime like WasmEdge can recognize it. This is handled automatically in OpenFunction, and users only need to specify the workloadRuntime as wasmedge.

The build phase of the wasm container images

If function.spec.workloadRuntime is set to wasmedge or the function’s annotation contains module.wasm.image/variant: compat-smart, function.spec.build.shipwright.strategy will be automatically generated based on the ClusterBuildStrategy named wasmedge in order to build a wasm container image with the module.wasm.image/variant: compat-smart annotation.

The serving phase of the wasm container images

When function.spec.workloadRuntime is set to wasmedge or the function’s annotation contains module.wasm.image/variant: compat-smart:

- If function.spec.serving.annotations does not contain module.wasm.image/variant, module.wasm.image/variant: compat-smart will be automatically added to function.spec.serving.annotations.

- If function.spec.serving.template.runtimeClassName is not set, this runtimeClassName will be automatically set to the default openfunction-crun.

If your Kubernetes cluster is in a public cloud like Azure, you can set spec.serving.template.runtimeClassName manually to override the default runtimeClassName.

Build and run wasm functions

To set up the WasmEdge workload runtime in a Kubernetes cluster and push images to a container registry, please refer to the prerequisites section for more info.

You can find more info about this sample Function here.

- Create a wasm function

cat <<EOF | kubectl apply -f -
apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: wasmedge-http-server
spec:
  workloadRuntime: wasmedge
  image: openfunctiondev/wasmedge_http_server:0.1.0
  imageCredentials:
    name: push-secret
  build:
    dockerfile: Dockerfile
    srcRepo:
      revision: main
      sourceSubPath: functions/knative/wasmedge/http-server
      url: https://github.com/OpenFunction/samples
  serving:
    scaleOptions:
      minReplicas: 0
    template:
      containers:
        - command:
            - /wasmedge_hyper_server.wasm
          imagePullPolicy: IfNotPresent
          livenessProbe:
            initialDelaySeconds: 3
            periodSeconds: 30
            tcpSocket:
              port: 8080
          name: function
    triggers:
      http:
        port: 8080
        route:
          rules:
            - matches:
                - path:
                    type: PathPrefix
                    value: /echo
EOF

- Check the wasm function status

kubectl get functions.core.openfunction.io -w

NAME BUILDSTATE SERVINGSTATE BUILDER SERVING ADDRESS AGE

wasmedge-http-server Succeeded Running builder-4p2qq serving-lrd8c http://wasmedge-http-server.default.svc.cluster.local/echo 12m

- Access the wasm function

Once the BUILDSTATE becomes Succeeded and the SERVINGSTATE becomes Running, you can access this function through the address in the ADDRESS field:

kubectl run curl --image=radial/busyboxplus:curl -i --tty

curl http://wasmedge-http-server.default.svc.cluster.local/echo -X POST -d "WasmEdge"

WasmEdge

9 - Serverless Applications

In addition to building and running Serverless Functions, you can also build and run Serverless Applications with OpenFunction.

OpenFunction supports building source code into container images in two different ways:

- Using Cloud Native Buildpacks to build source code without a Dockerfile

- Using Buildah or BuildKit to build source code with a Dockerfile

To push images to a container registry, you’ll need to create a secret containing the registry’s credentials and add the secret to imageCredentials. Please refer to the prerequisites section for more info.

Build and run a Serverless Application with a Dockerfile

If you already created a Dockerfile for your application like this Go Application, you can build and run this application in the serverless way like this:

- Create the sample go serverless application

cat <<EOF | kubectl apply -f -
apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: sample-go-app
  namespace: default
spec:
  build:
    builder: openfunction/buildah:v1.23.1
    shipwright:
      strategy:
        kind: ClusterBuildStrategy
        name: buildah
    srcRepo:
      revision: main
      sourceSubPath: apps/buildah/go
      url: https://github.com/OpenFunction/samples.git
  image: openfunctiondev/sample-go-app:v1
  imageCredentials:
    name: push-secret
  serving:
    template:
      containers:
        - imagePullPolicy: IfNotPresent
          name: function
    triggers:
      http:
        port: 8080
  version: v1.0.0
  workloadRuntime: OCIContainer
EOF

- Check the application status

You can then check the serverless app’s status by kubectl get functions.core.openfunction.io -w:

kubectl get functions.core.openfunction.io -w

NAME BUILDSTATE SERVINGSTATE BUILDER SERVING ADDRESS AGE

sample-go-app Succeeded Running builder-jgnzp serving-q6wdp http://sample-go-app.default.svc.cluster.local/ 22m

- Access this application

Once the BUILDSTATE becomes Succeeded and the SERVINGSTATE becomes Running, you can access this Go serverless app through the address in the ADDRESS field:

kubectl run curl --image=radial/busyboxplus:curl -i --tty

curl http://sample-go-app.default.svc.cluster.local

Here you can find a Java Serverless Application (with a Dockerfile) example.

Build and run a Serverless Application without a Dockerfile

If you have an application without a Dockerfile like this Java Application, you can also build and run your application in the serverless way:

- Create the sample Java serverless application

cat <<EOF | kubectl apply -f -
apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: sample-java-app-buildpacks
  namespace: default
spec:
  build:
    builder: cnbs/sample-builder:alpine
    srcRepo:
      revision: main
      sourceSubPath: apps/java-maven
      url: https://github.com/buildpacks/samples.git
  image: openfunction/sample-java-app-buildpacks:v1
  imageCredentials:
    name: push-secret
  serving:
    template:
      containers:
        - imagePullPolicy: IfNotPresent
          name: function
          resources: {}
    triggers:
      http:
        port: 8080
  version: v1.0.0
  workloadRuntime: OCIContainer
EOF

- Check the application status

You can then check the serverless app’s status by kubectl get functions.core.openfunction.io -w:

kubectl get functions.core.openfunction.io -w

NAME BUILDSTATE SERVINGSTATE BUILDER SERVING ADDRESS AGE

sample-java-app-buildpacks Succeeded Running builder-jgnzp serving-q6wdp http://sample-java-app-buildpacks.default.svc.cluster.local/ 22m

- Access this application

Once the BUILDSTATE becomes Succeeded and the SERVINGSTATE becomes Running, you can access this Java serverless app through the address in the ADDRESS field:

kubectl run curl --image=radial/busyboxplus:curl -i --tty

curl http://sample-java-app-buildpacks.default.svc.cluster.local

10 - BaaS Integration

One of the unique features of OpenFunction is its simple integration with various backend services (BaaS) through Dapr. Currently, OpenFunction supports Dapr pub/sub and bindings building blocks, and more will be added in the future.

In OpenFunction v0.7.0 and earlier, OpenFunction integrates with BaaS by injecting a dapr sidecar container into each function instance’s pod, which leads to the following problems:

- The entire function instance’s launch time is slowed down by the launching of the dapr sidecar container.

- The dapr sidecar container may consume more resources than the function container itself.

To address the problems above, OpenFunction introduces the Dapr Standalone Mode in v0.8.0.

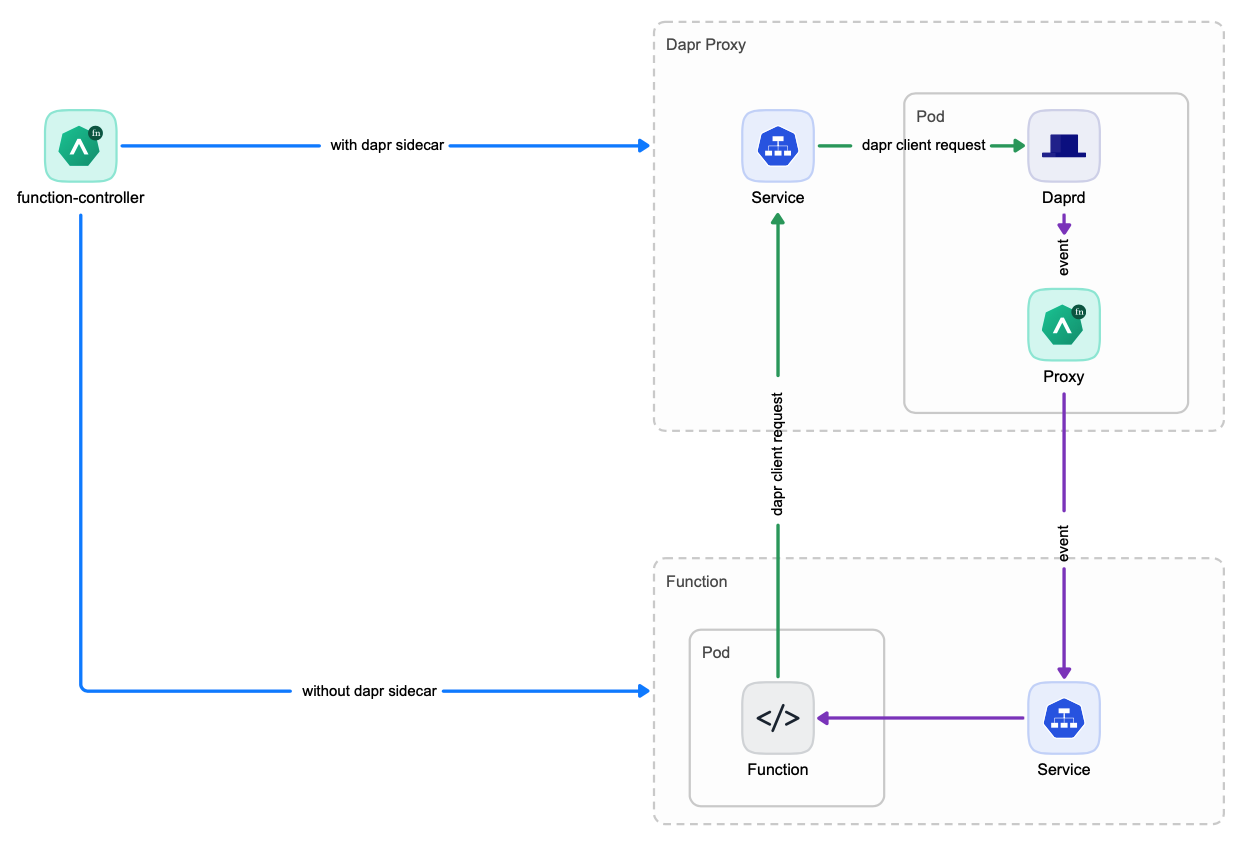

Dapr Standalone Mode

In Dapr standalone mode, one Dapr Proxy service is created for each function and shared by all instances of this function. This way, there is no need to launch a separate Dapr sidecar container for each function instance anymore, which reduces the function launch time significantly.

Choose the appropriate Dapr Service Mode

So now you have 2 options to integrate with BaaS:

- Dapr Sidecar Mode

- Dapr Standalone Mode

You can choose the appropriate Dapr Service Mode for your functions. The Dapr Standalone Mode is the recommended and default mode. You can use Dapr Sidecar Mode if your function doesn’t scale frequently or you have difficulty using the Dapr Standalone Mode.

You can control how to integrate with BaaS with 2 flags, both of which can be set in the function’s spec.serving.annotations:

- openfunction.io/enable-dapr can be set to true or false.

- openfunction.io/dapr-service-mode can be set to standalone or sidecar.

- When openfunction.io/enable-dapr is set to true, users can choose the Dapr Service Mode by setting openfunction.io/dapr-service-mode to standalone or sidecar.

- When openfunction.io/enable-dapr is set to false, the value of openfunction.io/dapr-service-mode will be ignored and neither the Dapr Sidecar nor the Dapr Proxy Service will be launched.

Both flags have default values if they’re not set.

- The value of openfunction.io/enable-dapr will be set to true if it’s not defined in spec.serving.annotations and the function definition contains either spec.serving.inputs or spec.serving.outputs. Otherwise it will be set to false.

- The default value of openfunction.io/dapr-service-mode is standalone if not set.
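The defaulting rules for the two flags can be expressed as a short Python sketch. It mirrors only the rules stated above and is not taken from the OpenFunction source; the function name `resolve_dapr` and its arguments are hypothetical.

```python
ENABLE_DAPR = "openfunction.io/enable-dapr"
SERVICE_MODE = "openfunction.io/dapr-service-mode"


def resolve_dapr(annotations, has_inputs=False, has_outputs=False):
    """Return (enabled, mode) following the documented defaults.

    `annotations` stands in for spec.serving.annotations (string values).
    `mode` is None when Dapr is disabled, because the service mode is
    ignored in that case.
    """
    raw = annotations.get(ENABLE_DAPR)
    if raw is None:
        # Default: enabled only when the function defines inputs or outputs.
        enabled = has_inputs or has_outputs
    else:
        enabled = raw == "true"
    if not enabled:
        return (False, None)
    # Default service mode is standalone.
    return (True, annotations.get(SERVICE_MODE, "standalone"))


print(resolve_dapr({}, has_outputs=True))
```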

Below you can find a function example to set these two flags:

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: cron-input-kafka-output
spec:
  version: "v2.0.0"
  image: openfunctiondev/cron-input-kafka-output:v1
  imageCredentials:
    name: push-secret
  build:
    builder: openfunction/builder-go:latest
    env:
      FUNC_NAME: "HandleCronInput"
      FUNC_CLEAR_SOURCE: "true"
    srcRepo:
      url: "https://github.com/OpenFunction/samples.git"
      sourceSubPath: "functions/async/bindings/cron-input-kafka-output"
      revision: "main"
  serving:
    annotations:
      openfunction.io/enable-dapr: "true"
      openfunction.io/dapr-service-mode: "standalone"
    template:
      containers:
        - name: function # DO NOT change this
          imagePullPolicy: IfNotPresent
    triggers:
      dapr:
        - name: cron
          type: bindings.cron
    outputs:
      - dapr:
          component: kafka-server
          operation: "create"
    bindings:
      cron:
        type: bindings.cron
        version: v1
        metadata:
          - name: schedule
            value: "@every 2s"
      kafka-server:
        type: bindings.kafka
        version: v1
        metadata:
          - name: brokers
            value: "kafka-server-kafka-brokers:9092"
          - name: topics
            value: "sample-topic"
          - name: consumerGroup
            value: "bindings-with-output"
          - name: publishTopic
            value: "sample-topic"
          - name: authRequired
            value: "false"

11 - Networking

11.1 - Introduction

Overview

Starting from v0.5.0, OpenFunction used Kubernetes Ingress to provide unified entrypoints for sync functions, which required an nginx ingress controller to be installed.

With the maturity of the Kubernetes Gateway API, we decided to implement OpenFunction Gateway based on the Kubernetes Gateway API to replace the previous ingress-based domain method in OpenFunction v0.7.0.

You can find the OpenFunction Gateway proposal here.

OpenFunction Gateway provides a more powerful and more flexible function gateway including features like:

Enable users to switch to any gateway implementations that support Kubernetes Gateway API such as Contour, Istio, Apache APISIX, Envoy Gateway (in the future) and more in an easier and vendor-neutral way.

Users can choose to install a default gateway implementation (Contour) and then define a new

gateway.networking.k8s.ioor use any existing gateway implementations in their environment and then reference an existinggateway.networking.k8s.io.Allow users to customize their own function access pattern like

hostTemplate: "{{.Name}}.{{.Namespace}}.{{.Domain}}"for host-based access.Allow users to customize their own function access pattern like

pathTemplate: "{{.Namespace}}/{{.Name}}"for path-based access.Allow users to customize each function’s route rules (host-based, path-based or both) in function definition and default route rules are provided for each function if there’re no customized route rules defined.

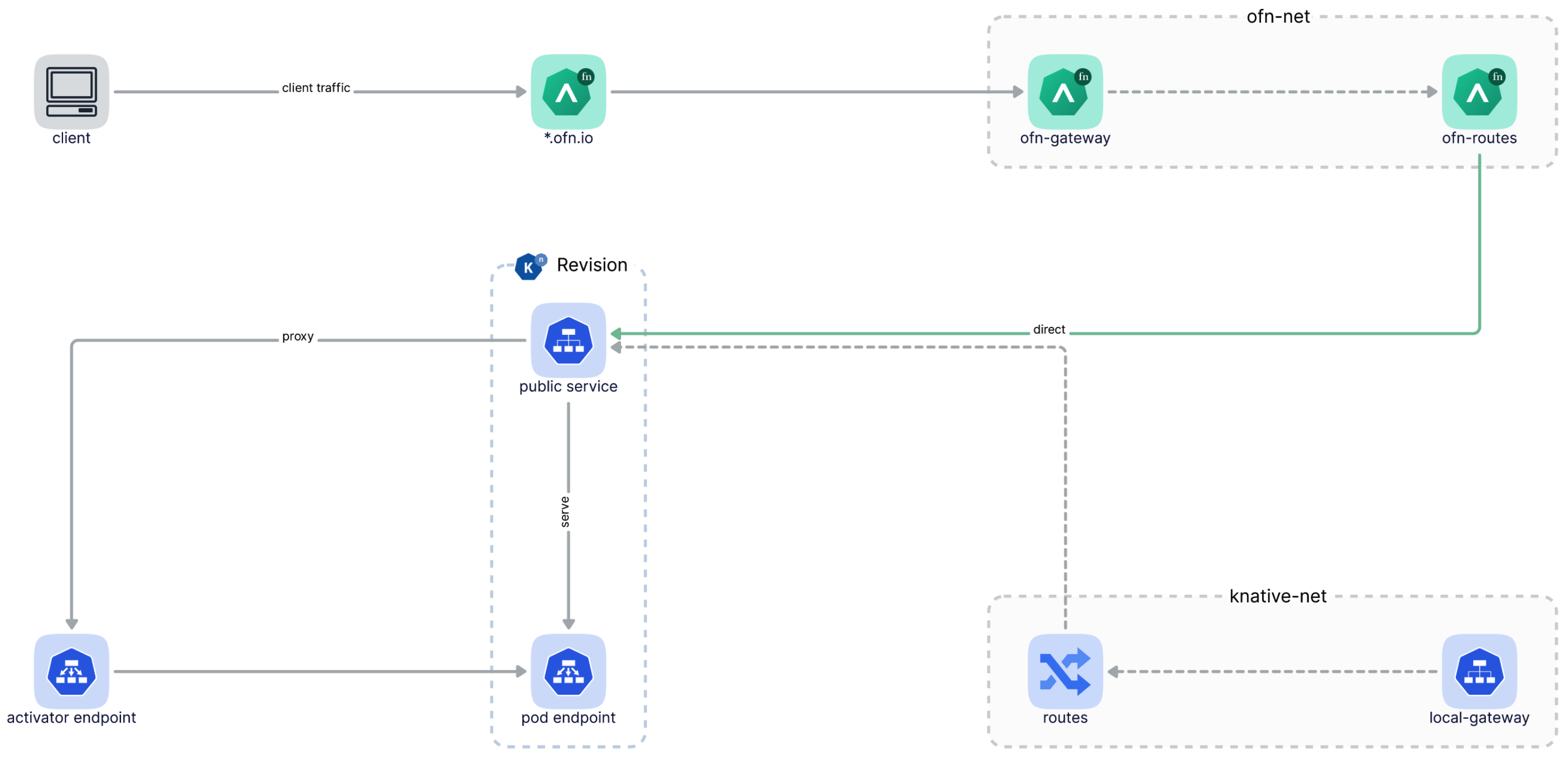

Send traffic to Knative service revisions directly without going through Knative’s own gateway anymore. You will need only OpenFunction Gateway since OpenFunction 0.7.0 to access OpenFunction sync functions, and you can ignore Knative’s domain config errors if you do not need to access Knative service directly.

Traffic splitting between function revisions (in the future)

The following diagram illustrates how client traffics go through OpenFunction Gateway and then reach a function directly:
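To see what hostTemplate and pathTemplate produce for a given function, the placeholders can be expanded by hand. The Python sketch below does this for the simple {{.Field}} placeholders shown in this document. It is an approximation written for illustration: it does not implement a full template language, and the helper name `render_template` is hypothetical.

```python
import re

# Matches simple placeholders such as {{.Name}} or {{ .Namespace }}.
PLACEHOLDER = re.compile(r"\{\{\s*\.(\w+)\s*\}\}")


def render_template(template, values):
    """Replace each {{.Field}} placeholder with values["Field"].

    A missing field raises KeyError rather than rendering an empty string.
    """
    return PLACEHOLDER.sub(lambda match: values[match.group(1)], template)


function = {"Name": "function-sample", "Namespace": "default", "Domain": "ofn.io"}
print(render_template("{{.Name}}.{{.Namespace}}.{{.Domain}}", function))
print(render_template("{{.Namespace}}/{{.Name}}", function))
```

For a function named function-sample in the default namespace with the domain ofn.io, this yields a host of function-sample.default.ofn.io and a path of default/function-sample.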

11.2 - OpenFunction Gateway

Inside OpenFunction Gateway

Backed by the Kubernetes Gateway, an OpenFunction Gateway defines how users can access sync functions.

Whenever an OpenFunction Gateway is created, the gateway controller will:

- Add a default listener named ofn-http-internal to gatewaySpec.listeners if there isn’t one there.

- Generate gatewaySpec.listeners.[*].hostname based on domain or clusterDomain.

- Inject gatewaySpec.listeners into the existing Kubernetes Gateway defined by the gatewayRef of the OpenFunction Gateway.

- Create a new Kubernetes Gateway based on the gatewaySpec.listeners field in gatewayDef of the OpenFunction Gateway.

- Create a service named gateway.openfunction.svc.cluster.local that defines a unified entry for sync functions.
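The first step, adding the default listener only when it is missing, can be sketched in Python as follows. This is an illustration under assumptions rather than the controller's logic: the hostname pattern, protocol, and port are copied from the default gateway manifest shown later in this section, and the function name `ensure_default_listener` is hypothetical.

```python
DEFAULT_LISTENER_NAME = "ofn-http-internal"


def ensure_default_listener(listeners, cluster_domain):
    """Return a new listener list that includes the default internal listener.

    `listeners` stands in for gatewaySpec.listeners (a list of dicts).
    The input list is never modified.
    """
    if any(item.get("name") == DEFAULT_LISTENER_NAME for item in listeners):
        return list(listeners)
    default = {
        "name": DEFAULT_LISTENER_NAME,
        # Assumption: wildcard hostname derived from clusterDomain.
        "hostname": f"*.{cluster_domain}",
        "protocol": "HTTP",
        "port": 80,
    }
    return [default] + list(listeners)


external = {"name": "ofn-http-external", "hostname": "*.ofn.io", "protocol": "HTTP", "port": 80}
for listener in ensure_default_listener([external], "cluster.local"):
    print(listener["name"], listener["hostname"])
```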

After an OpenFunction Gateway is deployed, you’ll be able to find the status of Kubernetes Gateway and its listeners in OpenFunction Gateway status:

status:
  conditions:
    - message: Gateway is scheduled
      reason: Scheduled
      status: "True"
      type: Scheduled
    - message: Valid Gateway
      reason: Valid
      status: "True"
      type: Ready
  listeners:
    - attachedRoutes: 0
      conditions:
        - message: Valid listener
          reason: Ready
          status: "True"
          type: Ready
      name: ofn-http-internal
      supportedKinds:
        - group: gateway.networking.k8s.io
          kind: HTTPRoute
    - attachedRoutes: 0
      conditions:
        - message: Valid listener
          reason: Ready
          status: "True"
          type: Ready
      name: ofn-http-external
      supportedKinds:
        - group: gateway.networking.k8s.io
          kind: HTTPRoute

The Default OpenFunction Gateway

OpenFunction Gateway uses Contour as the default Kubernetes Gateway implementation.

The following OpenFunction Gateway will be created automatically once you install OpenFunction:

apiVersion: networking.openfunction.io/v1alpha1
kind: Gateway
metadata:
  name: openfunction
  namespace: openfunction
spec:
  domain: ofn.io
  clusterDomain: cluster.local
  hostTemplate: "{{.Name}}.{{.Namespace}}.{{.Domain}}"
  pathTemplate: "{{.Namespace}}/{{.Name}}"
  httpRouteLabelKey: "app.kubernetes.io/managed-by"
  gatewayRef:
    name: contour
    namespace: projectcontour
  gatewaySpec:
    listeners:
    - name: ofn-http-internal
      hostname: "*.cluster.local"
      protocol: HTTP
      port: 80
      allowedRoutes:
        namespaces:
          from: All
    - name: ofn-http-external
      hostname: "*.ofn.io"
      protocol: HTTP
      port: 80
      allowedRoutes:
        namespaces:
          from: All

You can customize the default OpenFunction Gateway like below:

kubectl edit gateway openfunction -n openfunction

Switch to a different Kubernetes Gateway

You can switch to any gateway implementation that supports the Kubernetes Gateway API, such as Contour, Istio, Apache APISIX, or Envoy Gateway (in the future), in an easier and vendor-neutral way.

Here you can find more details.

Multiple OpenFunction Gateway

Multiple Gateways are meaningless for OpenFunction; currently only one OpenFunction Gateway is supported.

11.3 - Route

What is Route?

Route is part of the Function definition. Route defines how traffic from the Gateway listener is routed to a function.

A Route specifies the Gateway to attach to in GatewayRef, which allows it to receive traffic from that Gateway.

Once a sync Function is created, the function controller will:

- Look for the Gateway called openfunction in the openfunction namespace, then attach to this Gateway if route.gatewayRef is not defined in the function.
- Automatically generate route.hostnames based on Gateway.spec.hostTemplate if route.hostnames is not defined in the function.
- Automatically generate route.rules based on Gateway.spec.pathTemplate or the path /, if route.rules is not defined in the function.
- Create an HTTPRoute custom resource based on Route. BackendRefs will be automatically linked to the corresponding Knative service revision, and the label HTTPRouteLabelKey will be added to this HTTPRoute.
- Create the service {{.Name}}.{{.Namespace}}.svc.cluster.local, which defines an entry for accessing the function from within the cluster.
- Update the HTTPRoute if the Gateway referenced by route.gatewayRef changes.

After a sync Function is deployed, you’ll be able to find Function addresses and Route status in Function’s status field, e.g:

status:
  addresses:
  - type: External
    value: http://function-sample-serving-only.default.ofn.io/
  - type: Internal
    value: http://function-sample-serving-only.default.svc.cluster.local/
  build:
    resourceHash: "14903236521345556383"
    state: Skipped
  route:
    conditions:
    - message: Valid HTTPRoute
      reason: Valid
      status: "True"
      type: Accepted
    hosts:
    - function-sample-serving-only.default.ofn.io
    - function-sample-serving-only.default.svc.cluster.local
    paths:
    - type: PathPrefix
      value: /
  serving:
    lastSuccessfulResourceRef: serving-znk54
    resourceHash: "10715302888241374768"
    resourceRef: serving-znk54
    service: serving-znk54-ksvc-nbg6f
    state: Running

Note

The Address of type Internal in Function.status provides the default method for accessing functions from within the cluster.

This internal address is not affected by the Gateway referenced by route.gatewayRef, and it is suitable for use as the sink.url of an EventSource.

The Address of type External in Function.status provides a method for accessing functions from outside the cluster. You can choose to configure Magic DNS or real DNS; please refer to access functions by the external address for more details.

This external address is generated based on route.gatewayRef, route.hostnames, and route.rules. The routing mode only takes effect on this external address. The following sections explain how it works.

For more information about how to access functions, please refer to Function Entrypoints.

Host Based Routing

Host-based is the default routing mode. When route.hostnames is not defined, route.hostnames will be generated based on gateway.spec.hostTemplate.

If route.rules is not defined, route.rules will be generated based on the path /.

kubectl apply -f - <<EOF
apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  version: "v1.0.0"
  image: "openfunctiondev/v1beta1-http:latest"
  serving:
    template:
      containers:
        - name: function
          imagePullPolicy: Always
    triggers:
      http:
        route:
          gatewayRef:
            name: openfunction
            namespace: openfunction
EOF

If you are using the default OpenFunction Gateway, the function external address will be as below:

http://function-sample.default.ofn.io/
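To make the template expansion concrete, the sketch below mimics how the default hostTemplate and pathTemplate would expand for function-sample in the default namespace. The render_template helper is hypothetical and only imitates the placeholder substitution with sed; it is not how the function controller is implemented.

```shell
#!/bin/sh
# Hypothetical helper (not part of OpenFunction): expands the Gateway's
# Go-style template placeholders for a single function using sed.
render_template() {
  # $1 = template, $2 = function name, $3 = namespace, $4 = domain
  printf '%s\n' "$1" | sed \
    -e "s/{{\.Name}}/$2/g" \
    -e "s/{{\.Namespace}}/$3/g" \
    -e "s/{{\.Domain}}/$4/g"
}

# Templates and domain copied from the default OpenFunction Gateway.
FUNC_HOST=$(render_template '{{.Name}}.{{.Namespace}}.{{.Domain}}' function-sample default ofn.io)
FUNC_PATH=$(render_template '{{.Namespace}}/{{.Name}}' function-sample default ofn.io)

echo "host: $FUNC_HOST"
echo "path: /$FUNC_PATH"
```

The rendered host is the one used in the host-based address above; the rendered path is what path-based routing generates when route.rules is not defined.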

Path Based Routing

If you define route.hostnames in a function, route.rules will be generated based on gateway.spec.pathTemplate.

kubectl apply -f - <<EOF
apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  version: "v1.0.0"
  image: "openfunctiondev/v1beta1-http:latest"
  serving:
    template:
      containers:
        - name: function
          imagePullPolicy: Always
    triggers:
      http:
        route:
          gatewayRef:
            name: openfunction
            namespace: openfunction
          hostnames:
          - "sample.ofn.io"
EOF

If you are using the default OpenFunction Gateway, the function external address will be as below:

http://sample.default.ofn.io/default/function-sample/

Host and Path based routing

You can define hostname and path at the same time to customize how traffic should be routed to your function.

Note

In this mode, you’ll need to resolve possible conflicts between HTTPRoutes by yourself.

kubectl apply -f - <<EOF
apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: function-sample
spec:
  version: "v1.0.0"
  image: "openfunctiondev/v1beta1-http:latest"
  serving:
    template:
      containers:
        - name: function
          imagePullPolicy: Always
    triggers:
      http:
        route:
          gatewayRef:
            name: openfunction
            namespace: openfunction
          rules:
            - matches:
                - path:
                    type: PathPrefix
                    value: /v2/foo
          hostnames:
          - "sample.ofn.io"
EOF

If you are using the default OpenFunction Gateway, the function external address will be as below:

http://sample.default.ofn.io/v2/foo/

11.4 - Function Entrypoints

There are several ways to access a sync function. The following sections describe each of them.

This documentation assumes that you are using the default OpenFunction Gateway and that you have a sync function named function-sample.

Access functions from within the cluster

Access functions by the internal address

OpenFunction will create this service for every sync Function: {{.Name}}.{{.Namespace}}.svc.cluster.local. This service will be used to provide the Function internal address.

Get the Function internal address by running the following command:

export FUNC_INTERNAL_ADDRESS=$(kubectl get function function-sample -o=jsonpath='{.status.addresses[?(@.type=="Internal")].value}')

This address provides the default method for accessing functions within the cluster, and it is suitable for use as the sink.url of an EventSource.

Access Function using curl in pod:

kubectl run --rm ofn-test -i --tty --image=radial/busyboxplus:curl -- curl -sv $FUNC_INTERNAL_ADDRESS

Access functions from outside the cluster

Access functions by the Kubernetes Gateway’s IP address

Get Kubernetes Gateway’s ip address:

export IP=$(kubectl get node -l "! node.kubernetes.io/exclude-from-external-load-balancers" -o=jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')

Get Function’s HOST and PATH:

export FUNC_HOST=$(kubectl get function function-sample -o=jsonpath='{.status.route.hosts[0]}')

export FUNC_PATH=$(kubectl get function function-sample -o=jsonpath='{.status.route.paths[0].value}')

Access Function using curl directly:

curl -sv -H "Host: $FUNC_HOST" "http://$IP$FUNC_PATH"

Access functions by the external address

To access a sync function by the external address, you’ll need to configure DNS first. Either Magic DNS or real DNS works:

Magic DNS

Get Kubernetes Gateway’s ip address:

export IP=$(kubectl get node -l "! node.kubernetes.io/exclude-from-external-load-balancers" -o=jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')

Replace the domain defined in the OpenFunction Gateway with Magic DNS:

export DOMAIN="$IP.sslip.io"
kubectl patch gateway.networking.openfunction.io/openfunction -n openfunction --type merge --patch '{"spec": {"domain": "'$DOMAIN'"}}'

Then, you can see the Function external address in the Function’s status field:

kubectl get function function-sample -oyaml

status:
  addresses:
  - type: External
    value: http://function-sample.default.172.31.73.53.sslip.io/
  - type: Internal
    value: http://function-sample.default.svc.cluster.local/
  build:
    resourceHash: "14903236521345556383"
    state: Skipped
  route:
    conditions:
    - message: Valid HTTPRoute
      reason: Valid
      status: "True"
      type: Accepted
    hosts:
    - function-sample.default.172.31.73.53.sslip.io
    - function-sample.default.svc.cluster.local
    paths:
    - type: PathPrefix
      value: /
  serving:
    lastSuccessfulResourceRef: serving-t56fq
    resourceHash: "2638289828407595605"
    resourceRef: serving-t56fq
    service: serving-t56fq-ksvc-bv8ng
    state: Running

Real DNS

If you have an external IP address, you can configure a wildcard A record as your domain:

# Here example.com is the domain defined in OpenFunction Gateway
*.example.com == A <external-ip>

If you have a CNAME, you can configure a CNAME record as your domain:

# Here example.com is the domain defined in OpenFunction Gateway
*.example.com == CNAME <external-name>

Replace the domain defined in the OpenFunction Gateway with the domain you configured above:

export DOMAIN="example.com"
kubectl patch gateway.networking.openfunction.io/openfunction -n openfunction --type merge --patch '{"spec": {"domain": "'$DOMAIN'"}}'

Then, you can see the Function external address in the Function’s status field:

kubectl get function function-sample -oyaml

status:
  addresses:
  - type: External
    value: http://function-sample.default.example.com/
  - type: Internal
    value: http://function-sample.default.svc.cluster.local/
  build:
    resourceHash: "14903236521345556383"
    state: Skipped
  route:
    conditions:
    - message: Valid HTTPRoute
      reason: Valid
      status: "True"
      type: Accepted
    hosts:
    - function-sample.default.example.com
    - function-sample.default.svc.cluster.local
    paths:
    - type: PathPrefix
      value: /
  serving:
    lastSuccessfulResourceRef: serving-t56fq
    resourceHash: "2638289828407595605"
    resourceRef: serving-t56fq
    service: serving-t56fq-ksvc-bv8ng
    state: Running

Then, you can get Function external address by running following command:

export FUNC_EXTERNAL_ADDRESS=$(kubectl get function function-sample -o=jsonpath='{.status.addresses[?(@.type=="External")].value}')

Now, you can access Function using curl directly:

curl -sv $FUNC_EXTERNAL_ADDRESS
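As a quick sanity check for the Magic DNS setup, the sketch below computes the external address you would expect for a given node IP under the default hostTemplate. The IP, function name, and namespace are sample values taken from the status output above; in a real cluster, IP would come from the kubectl command shown earlier.

```shell
#!/bin/sh
# Sample values (assumptions for illustration only).
IP="172.31.73.53"
FUNC_NAME="function-sample"
FUNC_NAMESPACE="default"

# Magic DNS domain, built the same way as in the patch command above.
DOMAIN="${IP}.sslip.io"

# Default hostTemplate: {{.Name}}.{{.Namespace}}.{{.Domain}}
EXPECTED_ADDRESS="http://${FUNC_NAME}.${FUNC_NAMESPACE}.${DOMAIN}/"
echo "$EXPECTED_ADDRESS"
```

If FUNC_EXTERNAL_ADDRESS differs from this value, the Gateway domain patch has probably not been applied or reconciled yet.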

12 - CI/CD

Overview

Previously, users could use OpenFunction to build function or application source code into container images and then deploy the built image directly to the underlying sync/async Serverless runtime without user intervention.

However, OpenFunction could neither rebuild and redeploy the image whenever the function or application source code changed, nor redeploy the image whenever the image itself changed (for example, when the image was built and pushed manually or by another function).

Starting from v1.0.0, OpenFunction can detect source code or image changes and then rebuild and/or redeploy the newly built image through a new component called Revision Controller. The Revision Controller is able to:

- Detect source code changes in GitHub, GitLab, or Gitee, then rebuild and redeploy the newly built image whenever the source code changes.
- Detect changes to the bundle container image (the image containing the source code), then rebuild and redeploy the newly built image whenever the bundle image changes.
- Detect changes to the function or application image, then redeploy the new image whenever the function or application image changes.

Quick start

Install Revision Controller

You can enable Revision Controller when installing OpenFunction by simply adding the following flag to the helm command.

--set revisionController.enable=true

You can also enable Revision Controller after OpenFunction is installed:

kubectl apply -f https://raw.githubusercontent.com/OpenFunction/revision-controller/release-1.0/deploy/bundle.yaml

The Revision Controller will be installed to the openfunction namespace by default. You can download bundle.yaml and change the namespace manually if you want to install it to another namespace.

Detect source code or image changes

To detect source code or image changes, you’ll need to add revision controller switch and params like below to a function’s annotation.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  annotations:
    openfunction.io/revision-controller: enable
    openfunction.io/revision-controller-params: |
      type: source
      repo-type: github
      polling-interval: 1m
  name: function-http-java
  namespace: default
spec:
  build:
    ...
  serving:
    ...

Annotations

| Key | Description |
|---|---|
| openfunction.io/revision-controller | Whether to enable revision controller to detect source code or image changes for this function, can be set to either enable or disable. |
| openfunction.io/revision-controller-params | Parameters for revision controller. |

Parameters

| Name | Description |
|---|---|
| type | The change type to detect: source, source-image, or image. |
| polling-interval | The interval at which to poll the image digest or source code head. |
| repo-type | The type of the git repo: github, gitlab, or gitee. Defaults to github. |
| base-url | The base url of the gitlab server. |
| auth-type | The auth type of the gitlab server. |
| project-id | The project id of a gitlab repo. |
| insecure-registry | If the image registry is insecure, you should set this to true. |
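As an illustration of how these parameters fit together, the sketch below generates the body of the openfunction.io/revision-controller-params annotation and rejects change types that are not listed in the table. The revision_params helper is hypothetical and not part of OpenFunction; it only covers the type and polling-interval parameters.

```shell
#!/bin/sh
# Hypothetical helper: prints a revision-controller-params body.
revision_params() {
  # $1 = change type, $2 = polling interval (for example 1m)
  case "$1" in
    source|source-image|image) ;;
    *) echo "unsupported type: $1" >&2; return 1 ;;
  esac
  printf 'type: %s\npolling-interval: %s\n' "$1" "$2"
}

# Watch only the function image and poll every minute.
PARAMS=$(revision_params image 1m)
echo "$PARAMS"
```

The printed lines can be pasted under the openfunction.io/revision-controller-params annotation of a function.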

13 - OpenFunction Events

13.1 - Introduction

Overview

OpenFunction Events is OpenFunction’s event management framework. It provides the following core features:

- Support for triggering target functions by synchronous and asynchronous calls

- User-defined trigger judgment logic

- The components of OpenFunction Events can be driven by OpenFunction itself

Architecture

The following diagram illustrates the architecture of OpenFunction Events.

Concepts

EventSource

EventSource defines the producer of an event, such as a Kafka service, an object storage service, and even a function. It contains descriptions of these event producers and information about where to send these events.

EventSource supports the following types of event source servers:

- Kafka

- Cron (scheduler)

- Redis

EventBus (ClusterEventBus)

EventBus is responsible for aggregating events and making them persistent. It contains descriptions of an event bus broker that usually is a message queue (such as NATS Streaming and Kafka), and provides these configurations for EventSource and Trigger.

EventBus handles event bus adaptation for namespace scope by default. For cluster scope, ClusterEventBus is available as an event bus adapter and takes effect when other components cannot find an EventBus under a namespace.

EventBus supports the following event bus brokers:

- NATS Streaming
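The lookup order described above (a namespaced EventBus first, then the ClusterEventBus as a fallback) can be sketched as follows. This is a simulation only: the two lists stand in for resources that would exist in a cluster, and the namespace names team-a and team-b and the resolve_eventbus function are hypothetical.

```shell
#!/bin/sh
# Simulated cluster state (assumptions for illustration only).
NAMESPACED_BUSES="team-a/default"   # "<namespace>/<name>" entries
CLUSTER_BUSES="default"             # ClusterEventBus names

resolve_eventbus() {
  # $1 = namespace of the EventSource or Trigger, $2 = event bus name
  for bus in $NAMESPACED_BUSES; do
    if [ "$bus" = "$1/$2" ]; then
      echo "EventBus $1/$2"
      return 0
    fi
  done
  # No EventBus in the namespace: fall back to the cluster scope.
  for bus in $CLUSTER_BUSES; do
    if [ "$bus" = "$2" ]; then
      echo "ClusterEventBus $2"
      return 0
    fi
  done
  echo "no event bus named $2" >&2
  return 1
}

resolve_eventbus team-a default   # namespaced EventBus wins
resolve_eventbus team-b default   # falls back to the ClusterEventBus
```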

Trigger

Trigger is an abstraction of the purpose of an event, such as what needs to be done when a message is received. It contains the purpose of an event as defined by you: it tells the trigger which EventSource it should fetch events from, and the trigger then determines whether to trigger the target function according to the given conditions.

Reference

For more information, see EventSource Specifications and EventBus Specifications.

13.2 - Use EventSource

This document gives an example of how to use an event source to trigger a synchronous function.

In this example, an EventSource is defined for synchronous invocation, using the event source (a Kafka server) as an input binding of a function (a Knative service). When the event source generates an event, it invokes the function and gets a synchronous return through the spec.sink configuration.

Create a Function

Use the following content to create a function as the EventSource Sink. For more information about how to create a function, see Create sync functions.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: sink
spec:
  version: "v1.0.0"
  image: "openfunction/sink-sample:latest"
  serving:
    template:
      containers:
        - name: function
          imagePullPolicy: Always
    triggers:
      http:
        port: 8080

After the function is created, run the following command to get the URL of the function.

Note

In the URL of the function, openfunction is the name of the Kubernetes Service and io is the namespace where the Kubernetes Service runs. For more information, see Namespaces of Services.

$ kubectl get functions.core.openfunction.io
NAME   BUILDSTATE   SERVINGSTATE   BUILDER   SERVING         URL                                    AGE
sink   Skipped      Running                  serving-4x5wh   https://openfunction.io/default/sink   13s

Create a Kafka Cluster

Run the following commands to install strimzi-kafka-operator in the default namespace.

helm repo add strimzi https://strimzi.io/charts/
helm install kafka-operator -n default strimzi/strimzi-kafka-operator

Use the following content to create a file kafka.yaml.

apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: kafka-server
  namespace: default
spec:
  kafka:
    version: 3.3.1
    replicas: 1
    listeners:
      - name: plain
        port: 9092
        type: internal
        tls: false
      - name: tls
        port: 9093
        type: internal
        tls: true
    config:
      offsets.topic.replication.factor: 1
      transaction.state.log.replication.factor: 1
      transaction.state.log.min.isr: 1
      default.replication.factor: 1
      min.insync.replicas: 1
      inter.broker.protocol.version: "3.1"
    storage:
      type: ephemeral
  zookeeper:
    replicas: 1
    storage:
      type: ephemeral
  entityOperator:
    topicOperator: {}
    userOperator: {}
---
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaTopic
metadata:
  name: events-sample
  namespace: default
  labels:
    strimzi.io/cluster: kafka-server
spec:
  partitions: 10
  replicas: 1
  config:
    retention.ms: 7200000
    segment.bytes: 1073741824

Run the following command to deploy a 1-replica Kafka server named kafka-server and a 1-replica Kafka topic named events-sample in the default namespace. The Kafka and Zookeeper clusters created by this command have a storage type of ephemeral and are demonstrated using emptyDir.

kubectl apply -f kafka.yaml

Run the following command to check pod status and wait for Kafka and Zookeeper to be up and running.

$ kubectl get po
NAME                                            READY   STATUS    RESTARTS   AGE
kafka-server-entity-operator-568957ff84-nmtlw   3/3     Running   0          8m42s
kafka-server-kafka-0                            1/1     Running   0          9m13s
kafka-server-zookeeper-0                        1/1     Running   0          9m46s
strimzi-cluster-operator-687fdd6f77-cwmgm       1/1     Running   0          11m

Run the following command to view the metadata of the Kafka cluster.

kafkacat -L -b kafka-server-kafka-brokers:9092

Trigger a Synchronous Function

Create an EventSource

Use the following content to create an EventSource configuration file (for example, eventsource-sink.yaml).

Note

- The following example defines an event source named my-eventsource and marks the events generated by the specified Kafka server as sample-one events.
- spec.sink references the target function (Knative service) created in the prerequisites.

apiVersion: events.openfunction.io/v1alpha1
kind: EventSource
metadata:
  name: my-eventsource
spec:
  logLevel: "2"
  kafka:
    sample-one:
      brokers: "kafka-server-kafka-brokers.default.svc.cluster.local:9092"
      topic: "events-sample"
      authRequired: false
  sink:
    uri: "http://openfunction.io.svc.cluster.local/default/sink"

Run the following command to apply the configuration file.

kubectl apply -f eventsource-sink.yaml

Run the following commands to check the results.

$ kubectl get eventsources.events.openfunction.io
NAME             EVENTBUS   SINK   STATUS
my-eventsource                     Ready

$ kubectl get components
NAME                                                      AGE
serving-8f6md-component-esc-kafka-sample-one-r527t        68m
serving-8f6md-component-ts-my-eventsource-default-wz8jt   68m

$ kubectl get deployments.apps
NAME                                  READY   UP-TO-DATE   AVAILABLE   AGE
serving-8f6md-deployment-v100-pg9sd   1/1     1            1           68m

Note

In this example of triggering a synchronous function, the workflow of the EventSource controller is described as follows:

- Create an EventSource custom resource named my-eventsource.
- Create a Dapr component named serving-xxxxx-component-esc-kafka-sample-one-xxxxx to enable the EventSource to associate with the event source.
- Create a Dapr component named serving-xxxxx-component-ts-my-eventsource-default-xxxxx to enable the EventSource to associate with the sink function.
- Create a Deployment named serving-xxxxx-deployment-v100-xxxxx-xxxxxxxxxx-xxxxx for processing events.

Create an event producer

To start the target function, you need to create some events to trigger the function.

Use the following content to create an event producer configuration file (for example, events-producer.yaml).

apiVersion: core.openfunction.io/v1beta1
kind: Function
metadata:
  name: events-producer
spec:
  version: "v1.0.0"
  image: openfunctiondev/v1beta1-bindings:latest
  serving:
    template:
      containers:
        - name: function
          imagePullPolicy: Always
    runtime: "async"
    inputs:
      - name: cron
        component: cron
    outputs:
      - name: target
        component: kafka-server
        operation: "create"
    bindings:
      cron:
        type: bindings.cron
        version: v1
        metadata:
          - name: schedule
            value: "@every 2s"
      kafka-server:
        type: bindings.kafka
        version: v1
        metadata:
          - name: brokers
            value: "kafka-server-kafka-brokers:9092"
          - name: topics
            value: "events-sample"
          - name: consumerGroup
            value: "bindings-with-output"
          - name: publishTopic
            value: "events-sample"
          - name: authRequired
            value: "false"

Run the following command to apply the configuration file.

kubectl apply -f events-producer.yaml

Run the following command to check the results in real time.

$ kubectl get po --watch
NAME                                                        READY   STATUS              RESTARTS   AGE
serving-k6zw8-deployment-v100-fbtdc-dc96c4589-s25dh         0/2     ContainerCreating   0          1s
serving-8f6md-deployment-v100-pg9sd-6666c5577f-4rpdg        2/2     Running             0          23m
serving-k6zw8-deployment-v100-fbtdc-dc96c4589-s25dh         0/2     ContainerCreating   0          1s
serving-k6zw8-deployment-v100-fbtdc-dc96c4589-s25dh         1/2     Running             0          5s
serving-k6zw8-deployment-v100-fbtdc-dc96c4589-s25dh         2/2     Running             0          8s
serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   0/2     Pending             0          0s
serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   0/2     Pending             0          0s
serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   0/2     ContainerCreating   0          0s
serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   0/2     ContainerCreating   0          2s
serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   1/2     Running             0          4s
serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   1/2     Running             0          4s
serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-8n6mk   2/2     Running             0          4s

13.3 - Use EventBus and Trigger

This document gives an example of how to use EventBus and Trigger.

Prerequisites

- You need to create a function as the target function to be triggered. Please refer to Create a function for more details.

- You need to create a Kafka cluster. Please refer to Create a Kafka cluster for more details.

Deploy a NATS streaming server

Run the following commands to deploy a NATS streaming server. This document uses nats://nats.default:4222 as the access address of the NATS streaming server and stan as the cluster ID. For more information, see NATS Streaming (STAN).

helm repo add nats https://nats-io.github.io/k8s/helm/charts/

helm install nats nats/nats

helm install stan nats/stan --set stan.nats.url=nats://nats:4222

Create an OpenFuncAsync Runtime Function

Use the following content to create a configuration file (for example, openfuncasync-function.yaml) for the target function, which is triggered by the Trigger CRD and prints the received message.

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: trigger-target
spec:
  version: "v1.0.0"
  image: openfunctiondev/v1beta1-trigger-target:latest
  serving:
    scaleOptions:
      keda:
        scaledObject:
          pollingInterval: 15
          minReplicaCount: 0
          maxReplicaCount: 10
          cooldownPeriod: 30
        triggers:
          - type: stan
            metadata:
              natsServerMonitoringEndpoint: "stan.default.svc.cluster.local:8222"
              queueGroup: "grp1"
              durableName: "ImDurable"
              subject: "metrics"
              lagThreshold: "10"
    triggers:
      dapr:
        - name: eventbus
          topic: metrics
    pubsub:
      eventbus:
        type: pubsub.natsstreaming
        version: v1
        metadata:
          - name: natsURL
            value: "nats://nats.default:4222"
          - name: natsStreamingClusterID
            value: "stan"
          - name: subscriptionType
            value: "queue"
          - name: durableSubscriptionName
            value: "ImDurable"
          - name: consumerID
            value: "grp1"

Run the following command to apply the configuration file.

kubectl apply -f openfuncasync-function.yaml

Create an EventBus and an EventSource

Use the following content to create a configuration file (for example, eventbus.yaml) for an EventBus.

apiVersion: events.openfunction.io/v1alpha1
kind: EventBus
metadata:
  name: default
spec:
  natsStreaming:
    natsURL: "nats://nats.default:4222"
    natsStreamingClusterID: "stan"
    subscriptionType: "queue"
    durableSubscriptionName: "ImDurable"

Use the following content to create a configuration file (for example, eventsource.yaml) for an EventSource.

Note

Set the name of the event bus through spec.eventBus.

apiVersion: events.openfunction.io/v1alpha1
kind: EventSource
metadata:
  name: my-eventsource
spec:
  logLevel: "2"
  eventBus: "default"
  kafka:
    sample-two:
      brokers: "kafka-server-kafka-brokers.default.svc.cluster.local:9092"
      topic: "events-sample"
      authRequired: false

Run the following commands to apply these configuration files.

kubectl apply -f eventbus.yaml
kubectl apply -f eventsource.yaml

Run the following commands to check the results.

$ kubectl get eventsources.events.openfunction.io
NAME             EVENTBUS   SINK   STATUS
my-eventsource   default           Ready

$ kubectl get eventbus.events.openfunction.io
NAME      AGE
default   6m53s

$ kubectl get components
NAME                                                 AGE
serving-6r5dl-component-eventbus-jlpqf               11m
serving-9689d-component-ebfes-my-eventsource-cmcbw   6m57s
serving-9689d-component-esc-kafka-sample-two-l99cg   6m57s
serving-k6zw8-component-cron-9x8hl                   61m
serving-k6zw8-component-kafka-server-sjrzs           61m

$ kubectl get deployments.apps
NAME                                  READY   UP-TO-DATE   AVAILABLE   AGE
serving-6r5dl-deployment-v100-m7nq2   0/0     0            0           12m
serving-9689d-deployment-v100-5qdvk   1/1     1            1           7m17s

Note

In the case of using the event bus, the workflow of the EventSource controller is described as follows:

- Create an EventSource custom resource named my-eventsource.
- Retrieve and reorganize the configuration of the EventBus, including the EventBus name (default in this example) and the name of the Dapr component associated with the EventBus.
- Create a Dapr component named serving-xxxxx-component-ebfes-my-eventsource-xxxxx to enable the EventSource to associate with the event bus.
- Create a Dapr component named serving-xxxxx-component-esc-kafka-sample-two-xxxxx to enable the EventSource to associate with the event source.
- Create a Deployment named serving-xxxxx-deployment-v100-xxxxx for processing events.

Create a Trigger

Use the following content to create a configuration file (for example, trigger.yaml) for a Trigger.

Note

- Set the event bus associated with the Trigger through spec.eventBus.
- Set the event input source through spec.inputs.
- This is a simple trigger that collects events from the EventBus named default. When it retrieves a sample-two event from the EventSource my-eventsource, it triggers a Knative service named function-sample-serving-qrdx8-ksvc-fwml8 and sends the event to the topic metrics of the event bus at the same time.

apiVersion: events.openfunction.io/v1alpha1
kind: Trigger
metadata:
  name: my-trigger
spec:
  logLevel: "2"
  eventBus: "default"
  inputs:
    inputDemo:
      eventSource: "my-eventsource"
      event: "sample-two"
  subscribers:
  - condition: inputDemo
    topic: "metrics"

Run the following command to apply the configuration file.

kubectl apply -f trigger.yaml

Run the following commands to check the results.

$ kubectl get triggers.events.openfunction.io
NAME         EVENTBUS   STATUS
my-trigger   default    Ready

$ kubectl get eventbus.events.openfunction.io
NAME      AGE
default   62m

$ kubectl get components
NAME                                                 AGE
serving-9689d-component-ebfes-my-eventsource-cmcbw   46m
serving-9689d-component-esc-kafka-sample-two-l99cg   46m
serving-dxrhd-component-eventbus-t65q7               13m
serving-zwlj4-component-ebft-my-trigger-4925n        100s

Note

In the case of using the event bus, the workflow of the Trigger controller is as follows:

- Create a Trigger custom resource named my-trigger.
- Retrieve and reorganize the configuration of the EventBus, including the EventBus name (default in this example) and the name of the Dapr component associated with the EventBus.
- Create a Dapr component named serving-xxxxx-component-ebft-my-trigger-xxxxx to enable the Trigger to associate with the event bus.
- Create a Deployment named serving-xxxxx-deployment-v100-xxxxx for processing trigger tasks.

Create an Event Producer

Use the following content to create an event producer configuration file (for example, events-producer.yaml).

apiVersion: core.openfunction.io/v1beta2
kind: Function
metadata:
  name: events-producer
spec:
  version: "v1.0.0"
  image: openfunctiondev/v1beta1-bindings:latest
  serving:
    template:
      containers:
        - name: function
          imagePullPolicy: Always
    triggers:
      dapr:
        - name: cron
          type: bindings.cron
    outputs:
      - dapr:
          name: kafka-server
          operation: "create"
    bindings:
      cron:
        type: bindings.cron
        version: v1
        metadata:
          - name: schedule
            value: "@every 2s"
      kafka-server:
        type: bindings.kafka
        version: v1
        metadata:
          - name: brokers
            value: "kafka-server-kafka-brokers:9092"
          - name: topics
            value: "events-sample"
          - name: consumerGroup
            value: "bindings-with-output"
          - name: publishTopic
            value: "events-sample"
          - name: authRequired
            value: "false"

Run the following command to apply the configuration file.

kubectl apply -f events-producer.yaml

Run the following commands to observe changes of the target asynchronous function.

$ kubectl get functions.core.openfunction.io
NAME             BUILDSTATE   SERVINGSTATE   BUILDER   SERVING         URL   AGE
trigger-target   Skipped      Running                  serving-dxrhd         20m

$ kubectl get po --watch
NAME                                                  READY   STATUS              RESTARTS   AGE
serving-dxrhd-deployment-v100-xmrkq-785cb5f99-6hclm   0/2     Pending             0          0s
serving-dxrhd-deployment-v100-xmrkq-785cb5f99-6hclm   0/2     Pending             0          0s
serving-dxrhd-deployment-v100-xmrkq-785cb5f99-6hclm   0/2     ContainerCreating   0          0s
serving-dxrhd-deployment-v100-xmrkq-785cb5f99-6hclm   0/2     ContainerCreating   0          2s
serving-dxrhd-deployment-v100-xmrkq-785cb5f99-6hclm   1/2     Running             0          4s
serving-dxrhd-deployment-v100-xmrkq-785cb5f99-6hclm   1/2     Running             0          4s
serving-dxrhd-deployment-v100-xmrkq-785cb5f99-6hclm   2/2     Running             0          4s

13.4 - Use Multiple Sources in One EventSource

This document describes how to use multiple sources in one EventSource.

Prerequisites

- You need to create a function as the target function to be triggered. Please refer to Create a function for more details.

- You need to create a Kafka cluster. Please refer to Create a Kafka cluster for more details.

Use Multiple Sources in One EventSource

Use the following content to create an EventSource configuration file (for example, eventsource-multi.yaml).

Note

- The following example defines an event source named my-eventsource and marks the events generated by the specified Kafka server as sample-three.

- spec.sink references the target function (Knative service).

- spec.cron is configured to trigger the function defined in spec.sink every 5 seconds.

    apiVersion: events.openfunction.io/v1alpha1
    kind: EventSource
    metadata:
      name: my-eventsource
    spec:
      logLevel: "2"
      kafka:
        sample-three:
          brokers: "kafka-server-kafka-brokers.default.svc.cluster.local:9092"
          topic: "events-sample"
          authRequired: false
      cron:
        sample-three:
          schedule: "@every 5s"
      sink:
        uri: "http://openfunction.io.svc.cluster.local/default/sink"

Run the following command to apply the configuration file.

    kubectl apply -f eventsource-multi.yaml

Run the following commands to observe changes.

    $ kubectl get eventsources.events.openfunction.io
    NAME             EVENTBUS   SINK   STATUS
    my-eventsource                     Ready

    $ kubectl get components
    NAME                                                      AGE
    serving-vqfk5-component-esc-cron-sample-three-dzcpv       35s
    serving-vqfk5-component-esc-kafka-sample-one-nr9pq        35s
    serving-vqfk5-component-ts-my-eventsource-default-q6g6m   35s

    $ kubectl get deployments.apps
    NAME                                       READY   UP-TO-DATE   AVAILABLE   AGE
    serving-4x5wh-ksvc-wxbf2-v100-deployment   1/1     1            1           3h14m
    serving-vqfk5-deployment-v100-vdmvj        1/1     1            1           48s
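The EventSource in this section declares two sources, one under spec.kafka and one under spec.cron, and the component list shows a separate component for each. As a rough illustration of that structure (not OpenFunction code), the Python sketch below walks a spec shaped like the example and lists each declared source. The helper name list_sources and the set of keys treated as settings rather than sources are assumptions made for this sketch, based only on the fields that appear in this document.

```python
# Keys of spec that are settings rather than event sources.
# Assumption: derived only from the fields used in this document's examples.
NON_SOURCE_KEYS = {"logLevel", "eventBus", "sink"}


def list_sources(spec):
    """Return (source kind, event name) pairs declared in an EventSource spec."""
    pairs = []
    for kind, entries in spec.items():
        if kind in NON_SOURCE_KEYS:
            continue
        for event_name in entries:
            pairs.append((kind, event_name))
    return pairs


# The spec of my-eventsource from the example, written as a Python dict.
spec = {
    "logLevel": "2",
    "kafka": {
        "sample-three": {
            "brokers": "kafka-server-kafka-brokers.default.svc.cluster.local:9092",
            "topic": "events-sample",
            "authRequired": False,
        }
    },
    "cron": {"sample-three": {"schedule": "@every 5s"}},
    "sink": {"uri": "http://openfunction.io.svc.cluster.local/default/sink"},
}

for kind, event_name in list_sources(spec):
    print(f"{kind}/{event_name}")
```

Each printed pair corresponds to one source that the EventSource has to serve, which is why adding a source kind to the same file adds another component rather than another EventSource.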

13.5 - Use ClusterEventBus

This document describes how to use a ClusterEventBus.

Prerequisites

You have finished the steps described in Use EventBus and Trigger.

Use a ClusterEventBus

Use the following content to create a ClusterEventBus configuration file (for example, clustereventbus.yaml).

    apiVersion: events.openfunction.io/v1alpha1
    kind: ClusterEventBus
    metadata:
      name: default
    spec:
      natsStreaming:
        natsURL: "nats://nats.default:4222"
        natsStreamingClusterID: "stan"
        subscriptionType: "queue"
        durableSubscriptionName: "ImDurable"

Run the following command to delete the EventBus.

    kubectl delete eventbus.events.openfunction.io default

Run the following command to apply the configuration file.

    kubectl apply -f clustereventbus.yaml

Run the following commands to check the results.

    $ kubectl get eventbus.events.openfunction.io
    No resources found in default namespace.

    $ kubectl get clustereventbus.events.openfunction.io
    NAME      AGE
    default   21s
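The steps above replace the namespaced EventBus named default with a ClusterEventBus of the same name. That suggests a resource referring to eventBus: "default" falls back to the cluster-scoped bus when no namespaced one exists. The Python sketch below models that lookup order. It is an assumption about the behavior, not the controller's actual code, and resolve_event_bus and both dictionaries are hypothetical names used only for illustration.

```python
def resolve_event_bus(name, namespace, event_buses, cluster_event_buses):
    """Pick the bus a resource would use: namespaced first, then cluster-wide.

    event_buses maps (namespace, name) to a spec; cluster_event_buses maps
    name to a spec. Returns (kind, spec), or None when neither exists.
    Assumption: the namespaced EventBus takes priority over ClusterEventBus.
    """
    namespaced = event_buses.get((namespace, name))
    if namespaced is not None:
        return ("EventBus", namespaced)
    cluster_wide = cluster_event_buses.get(name)
    if cluster_wide is not None:
        return ("ClusterEventBus", cluster_wide)
    return None


nats = {"natsStreaming": {"natsURL": "nats://nats.default:4222"}}

# Before the steps above: a namespaced EventBus named "default" exists.
print(resolve_event_bus("default", "default", {("default", "default"): nats}, {}))

# After the steps above: only the ClusterEventBus named "default" remains.
print(resolve_event_bus("default", "default", {}, {"default": nats}))
```

Under this assumption, the existing EventSource and Trigger resources need no change after the switch, because they refer to the bus only by name.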

13.6 - Use Trigger Conditions

This document describes how to use Trigger conditions.

Prerequisites

You have finished the steps described in Use EventBus and Trigger.

Use Trigger Conditions

Create two event sources

Use the following content to create an EventSource configuration file (for example, eventsource-a.yaml).

    apiVersion: events.openfunction.io/v1alpha1
    kind: EventSource
    metadata:
      name: eventsource-a
    spec:
      logLevel: "2"
      eventBus: "default"
      kafka:
        sample-five:
          brokers: "kafka-server-kafka-brokers.default.svc.cluster.local:9092"
          topic: "events-sample"
          authRequired: false

Use the following content to create another EventSource configuration file (for example, eventsource-b.yaml).

    apiVersion: events.openfunction.io/v1alpha1
    kind: EventSource
    metadata:
      name: eventsource-b
    spec:
      logLevel: "2"
      eventBus: "default"
      cron:
        sample-five:
          schedule: "@every 5s"

Run the following commands to apply these two configuration files.

    kubectl apply -f eventsource-a.yaml
    kubectl apply -f eventsource-b.yaml

Create a trigger with condition

Use the following content to create a configuration file (for example, condition-trigger.yaml) for a Trigger with condition.

    apiVersion: events.openfunction.io/v1alpha1
    kind: Trigger
    metadata:
      name: condition-trigger
    spec:
      logLevel: "2"
      eventBus: "default"
      inputs:
        eventA:
          eventSource: "eventsource-a"
          event: "sample-five"
        eventB:
          eventSource: "eventsource-b"
          event: "sample-five"
      subscribers:
      - condition: eventB
        sink:
          uri: "http://openfunction.io.svc.cluster.local/default/sink"
      - condition: eventA && eventB
        topic: "metrics"

Note

In this example, two input sources and two subscribers are defined, and their triggering relationship is described as follows:

- When input eventB is received, the input event is sent to the Knative service.

- When input eventB and input eventA are received, the input event is sent to the metrics topic of the event bus and sent to the Knative service at the same time.
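The triggering relationship in the note can be sketched in a few lines of Python. This is a minimal model under stated assumptions, not the Trigger's real implementation: it handles only a single input name or names joined by &&, as in the example, and it ignores timing, such as how long a received input remains valid. The helper names matches and matched_subscribers and the target labels are hypothetical.

```python
def matches(condition, received):
    """Return True when every input named in the condition has been received.

    Only a single name or names joined by "&&" are handled, mirroring the
    two conditions used in the example Trigger.
    """
    names = [part.strip() for part in condition.split("&&")]
    return all(name in received for name in names)


def matched_subscribers(subscribers, received):
    """Return the subscribers whose condition holds for the received inputs."""
    return [s for s in subscribers if matches(s["condition"], received)]


# The two subscribers of condition-trigger, with illustrative target labels.
subscribers = [
    {"condition": "eventB", "target": "sink (Knative service)"},
    {"condition": "eventA && eventB", "target": "metrics topic"},
]

for received in ({"eventB"}, {"eventA", "eventB"}):
    targets = [s["target"] for s in matched_subscribers(subscribers, received)]
    print(sorted(received), "->", targets)
```

With only eventB received, just the first subscriber matches. With both inputs received, both subscribers match, which corresponds to the event reaching the Knative service and the metrics topic at the same time.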

Run the following command to apply the configuration file.

    kubectl apply -f condition-trigger.yaml

Run the following commands to check the results.

    $ kubectl get eventsources.events.openfunction.io
    NAME            EVENTBUS   SINK   STATUS
    eventsource-a   default           Ready
    eventsource-b   default           Ready

    $ kubectl get triggers.events.openfunction.io
    NAME                EVENTBUS   STATUS
    condition-trigger   default    Ready

    $ kubectl get eventbus.events.openfunction.io
    NAME      AGE
    default   12s

Run the following commands. The output shows that the eventB condition in the Trigger is matched and the Knative service is triggered, because the event source eventsource-b is a cron task.

    $ kubectl get functions.core.openfunction.io
    NAME   BUILDSTATE   SERVINGSTATE   BUILDER   SERVING         URL                                    AGE
    sink   Skipped      Running                  serving-4x5wh   https://openfunction.io/default/sink   3h25m

    $ kubectl get po
    NAME                                                        READY   STATUS    RESTARTS   AGE
    serving-4x5wh-ksvc-wxbf2-v100-deployment-5c495c84f6-k2jdg   2/2     Running   0          46s

Create an event producer by referring to Create an Event Producer.

Run the following commands. The output shows that the eventA && eventB condition in the Trigger is matched and the event is also sent to the metrics topic of the event bus, so the OpenFuncAsync function is triggered.

    $ kubectl get functions.core.openfunction.io
    NAME             BUILDSTATE   SERVINGSTATE   BUILDER   SERVING         URL   AGE
    trigger-target   Skipped      Running                  serving-7hghp         103s

    $ kubectl get po
    NAME                                                  READY   STATUS    RESTARTS   AGE
    serving-7hghp-deployment-v100-z8wrf-946b4854d-svf55   2/2     Running   0          18s